Chapter 3 The law of mass action: animals collide

Infectious diseases have been an unpleasant companion of humankind for millions of years. Yet, crowd epidemic diseases could have only emerged within the past 11,000 years, following the rise of agriculture. The ability to maintain large and dense human populations, as well as the close contact with domestic animals, allowed the deadliest diseases to be sustained, which was not possible when human populations were sparse [135].

Perhaps the best-documented epidemic outbreak in ancient times is the plague of Athens (430-427 BCE), which caused the death of Pericles and killed around 30% of Athens' population [136]. The fact that some diseases were contagious was probably well known long before that. For instance, it has been claimed that in the 14th century BCE the Hittites sent rams infected with tularemia to their enemies to weaken them [137], and there is evidence of quarantine-like isolation of leprous individuals in the Biblical book of Leviticus. Yet, for over 2,000 years it was thought that diseases were caused by miasma or “bad air”. It was not until the end of the XIX century that it was finally discovered that microorganisms were the cause of diseases [138].

The advent of modern epidemiology is usually attributed to John Snow, who in the middle of the XIX century traced back the origin of a cholera epidemic in the city of London [139]. However, mathematical methods were not firmly introduced until the beginning of the XX century8. Already in 1906 Hamer showed that “an epidemic outbreak could come to an end despite the existence of large numbers of susceptible persons in the population, merely on a mechanical theory of numbers and density” [142]. It was, however, thanks to the works by Ross, Kermack and McKendrick that a mechanistic theory of epidemics was finally developed as an analogy to the law of mass action. In particular, it was McKendrick who gave the title to this chapter when he said in a lecture in 1912: “consider a type of epidemic which is spread by simple contact from human being to human being […] The rate at which this epidemic will spread depends obviously on the number of infected animals, and also on the number of animals that remain to be infected - in other words the occurrence of a new infection depends on a collision between an infected and uninfected animal” [143].

The next 50 years were mostly devoted to establishing the mathematical foundations of epidemiology. The problem was that this process was mostly carried out by mathematicians and statisticians, who were more interested in the theoretical implications of the models than in their application to data [144]. This situation changed during the 1980s when Anderson and May, coming from a background in zoology and ecology respectively, started to collaborate with biologists and mathematicians, bridging the gap between data and theory [62]. During the 1990s graphs were introduced in epidemiological models, challenging the classical assumption of homogeneous mixing (which we will discuss in section 3.1). This brought physicists into the field, attracted by the similarity of some concepts with phase transitions in non-equilibrium systems [145].

The latest developments in epidemic modeling are based on incorporating more and more data. For instance, the Global Epidemic and Mobility (GLEaM) framework incorporates demographic data of the whole world with short-range and long-range mobility data as the basis of its epidemic model, allowing for the simulation of world-wide pandemics [146]. Similarly, to properly study the spreading of Zika virus it is necessary to take into account the dynamics of mosquitoes, temperature, demographics and mobility, coupled to the disease dynamics of the virus itself [147]. Multiple sources of data are also being used in the study of vaccination, either to devise efficient administration strategies, including economic considerations [148], or to properly understand how vaccines actually work [149]. Moreover, the interplay between processes that have been studied in depth in complex systems, such as game theory, behavior diffusion and epidemic processes, is of particular interest nowadays.

Herd immunity, a term coined by Topley and Wilson in 1923 albeit with the completely opposite meaning to the current one [150], refers to the fact that it is possible to have a population in which a disease cannot spread even if only a fraction of the individuals are immune to it. First calculated theoretically in 1970 by Smith [151], it has been the subject of great interest because it makes it possible to protect a whole population even if there are members who cannot be vaccinated due to their medical conditions [152]. Unfortunately, the great successes achieved by vaccination are now endangered by people who refuse to vaccinate their children, which also affects those children who cannot be vaccinated but should have been protected by herd immunity [153].

For instance, measles requires 95% of the population to be vaccinated for herd immunity to work. This was achieved in the U.S. by the end of the past century, and the country was declared measles free in 2000. Similarly, the UK was declared measles free in 2017. Yet, the World Health Organization (WHO) removed this status from the UK in August 2019 [154], and the U.S. is facing a similar fate, as so far in 2019 it has reported the greatest number of cases since 1992 [155]. Both phenomena have been attributed to anti-vaccine groups, whose behavior can be studied from the point of view of game theory. But there are more ingredients at play. In particular, if the risk of infection is regarded as low, maybe thanks to herd immunity, the motivation to become vaccinated can decrease. This behavior can then spread among adults, a process that will clearly be coupled with the disease dynamics. Thus, a holistic view of the whole problem is needed, something that can only be achieved through the lens of complex systems [156].

In this context, rather than extending the mathematical formalism that is already well established, we pushed forward our knowledge about disease dynamics by adding data and revisiting some of the assumptions classically made either for simplicity or lack of information. For this reason, rather than giving a whole mathematical introduction and then visiting each contribution, we will organize them in a way that roughly follows the historical development of mathematical epidemiology, explaining in each section the basic ideas and then showing how we challenged those assumptions.

We will begin in section 3.1 with the most basic approach to disease dynamics. That is, humans are gathered in closed populations in which every individual can contact every other, very much like particles colliding in a box. This simple premise, known as homogeneous mixing, can be slightly improved by considering individuals to be part of smaller groups, with correspondingly different patterns of interaction. This is the classical approach to introduce the age structure of the population, for which experimental data exist. We will, however, go one step further and analyze the problem of projecting these data into the future, taking into account the demographic evolution of society. This part of the thesis will thus be based on the publication:

Arregui, S., Aleta, A., Sanz, J. and Moreno, Y., Projecting social contact matrices to different demographic structures, PLoS Comput. Biol. 14:e1006638, 2018

Our next step will be to introduce, in section 3.2, one of the cornerstone quantities of modern epidemiology, the basic reproduction number, \(R_0\). We will revisit its original definition and challenge it using data-driven population models, demonstrating that some of the assumptions that have been made since its conception are not entirely correct. This corresponds to the work:

Liu, Q.-H., Ajelli, M., Aleta, A., Merler, S., Moreno, Y. and Vespignani, A., Measurability of the epidemic reproduction number in data-driven contact networks, Proc. Natl. Acad. Sci. U.S.A., 115:12680-12685, 2018

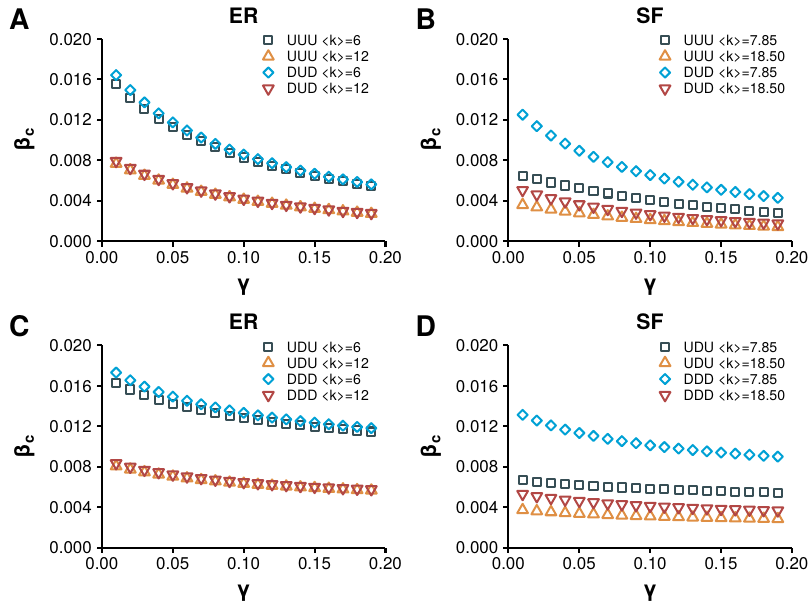

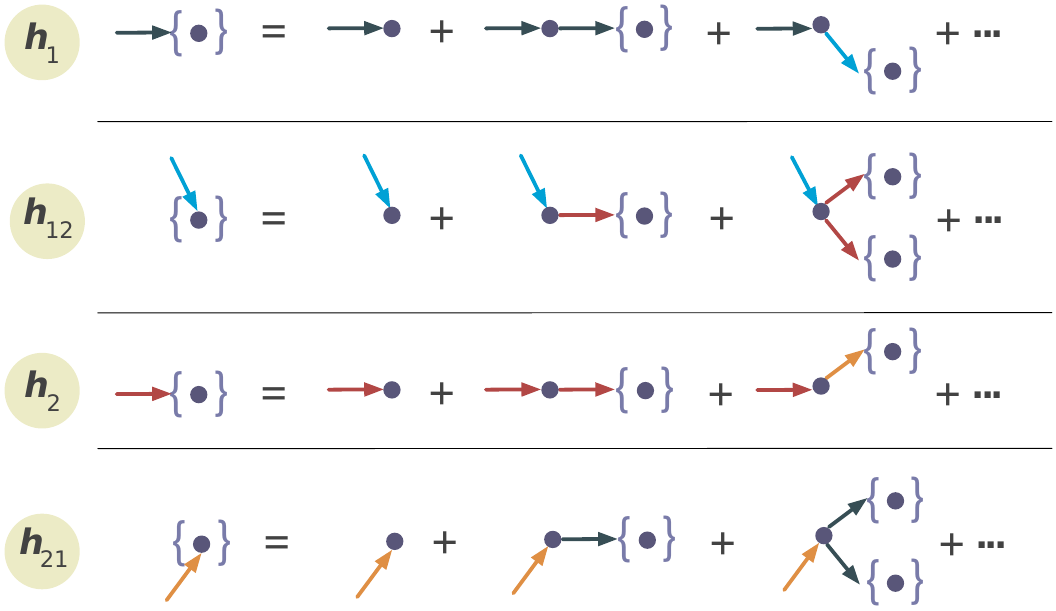

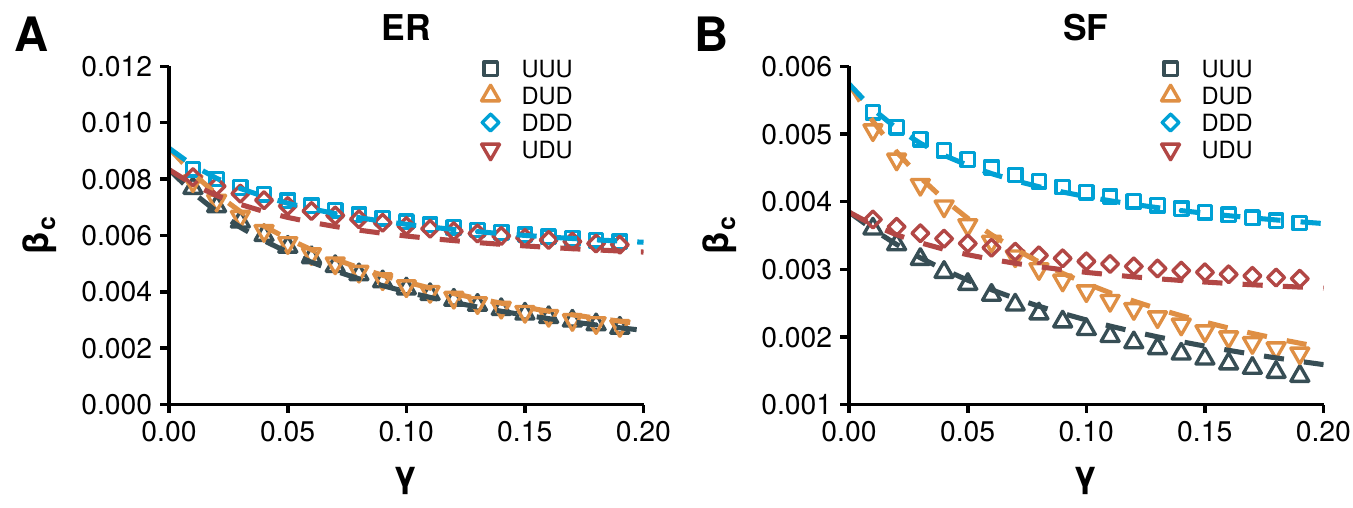

Then, in section 3.3 we will finally introduce networks into the picture. We will show some of the counter-intuitive consequences of this and, again, challenge some of the most basic assumptions. In particular, disease dynamics are often implemented on single-layer undirected networks, but we will show that directionality can play a crucial role in the dynamics, with particular emphasis on multilayer networks. We will follow the article

Wang, X., Aleta, A., Lu, D., Moreno, Y., Directionality reduces the impact of epidemics in multilayer networks, New. J. Phys., 21:093026, 2019

of which I am first co-author.

We will finish this chapter in section 3.4 by analyzing the age-contact networks that we generated in section 2.6, chapter 2. The objective of this part will be to show the different approaches that can be followed depending on the available data and their impact on the outcome of the dynamics. This will be based on the work

Aleta, A., Ferraz de Arruda, G. and Moreno, Y., Data-driven contact structures: From homogeneous mixing to multilayer networks, PLoS Comput. Biol. 16(7):e1008035, 2020

3.1 A basic assumption: homogeneous mixing

The starting point of this discussion is the very introduction of the paper published by Kermack and McKendrick in 1927, which is regarded as the origin of modern epidemiological models [157]. Even if over 90 years have passed, any text written today about the subject would start roughly in the same way:

“The problem may be summarised as follows: One (or more) infected person is introduced into a community of individuals, more or less susceptible to the disease in question. The disease spreads from the affected to the unaffected by contact infection. Each infected person runs through the course of his sickness, and finally is removed from the number of those who are sick, by recovery or by death. The chances of recovery or death vary from day to day during the course of his illness. The chances that the affected may convey infection to the unaffected are likewise dependent upon the stage of the sickness. As the epidemic spreads, the number of unaffected members of the community becomes reduced. Since the course of an epidemic is short compared with the life of an individual, the population may be considered as remaining constant, except in as far as it is modified by deaths due to the epidemic disease itself. In the course of time the epidemic may come to an end. […] [This] discussion will be limited to the case in which all members of the community are initially equally susceptible to the disease, and it will be further assumed that complete immunity is conferred by a single infection.”

For the sake of clarity we can summarize some of the implicit assumptions in the previous paragraph, plus some more that were introduced in other parts of the paper, as [158]:

1. The disease is directly transmitted from host to host.

2. The disease ends in either complete immunity or death.

3. Contacts occur according to the law of mass action.

4. Individuals are only distinguishable by their health status.

5. The population is closed.

6. The population is large enough to be described with a deterministic approach.

In this section we will explore the effect of relaxing assumptions 3 and 4. Note that these two assumptions can be regarded as an approximation for when there are insufficient data about the whereabouts of the population. Now, however, we have much more data available than Kermack and McKendrick did and thus in section 3.2 we will be able to completely remove assumption 4. Similarly, in sections 3.3 and 3.4 we will suppress assumptions 2 and 3. Besides, except for this introduction, throughout the chapter we will disregard assumption 6, but we will always respect assumptions 1 and 5.

In modern terminology, models in which individuals are only distinguishable by their health status are known as compartmental models. In these models, it is supposed that each individual belongs to one and only one compartment (class, in Kermack and McKendrick's terms). Compartments are a tool to encapsulate the complexity of infections in a simple way. Hence, an individual that is completely free from the disease but can be infected is said to be in the susceptible state (\(S\)), one that can spread the disease is said to be infected (\(I\)) and one that can neither be infected nor infect is said to be removed (\(R\)), either because it is immune or dead. This classification is known as the \(SIR\) model. This framework, however, is quite flexible and it is possible to incorporate as many compartments as needed, depending on the disease under study, reaching hundreds of compartments in the most sophisticated models [159]. In particular, in section 3.1.2, we will introduce the exposed (\(E\)) state to classify individuals that have been infected but are not yet infectious.

The six assumptions, after some algebra, lead to the original equation proposed by Kermack and McKendrick (albeit with slightly updated notation),

\[\begin{equation} \frac{\text{d}S(t)}{\text{d}t} = S(t) \int_0^\infty A(\tau) \frac{\text{d}S(t-\tau)}{\text{d}t} \text{d}\tau\,, \tag{3.1} \end{equation}\]

where \(S(t)\) denotes the number of individuals in the susceptible compartment - henceforth number of susceptibles - at time \(t\) and \(A(\tau)\) is the expected infectivity of an individual that became infected \(\tau\) units of time ago [157], [158].

To obtain \(A(\tau)\), we define \(\phi(\tau)\) as the rate of infectivity of an individual that has been infected for a time \(\tau\). Similarly, we define \(\psi(\tau)\) as the rate of removal, either by immunization or death. Let us denote by \(v(t,\tau)\) the number of individuals that are infected at time \(t\) and have been infected for a period of length \(\tau\). If we divide time into separate intervals \(\Delta t\), such that the infection takes place only at the instant of passing from one interval to the next, the following relation holds:

\[\begin{equation} \begin{split} v(t,\tau) & = v(t-\Delta t,\tau-\Delta t)(1-\psi(\tau-\Delta t)) \\ & = v(t-2\Delta t,\tau-2\Delta t)(1-\psi(\tau-\Delta t))(1-\psi(\tau-2\Delta t)) \\ & = v(t-\tau,0) B(\tau)\,, \end{split} \tag{3.2} \end{equation}\]

so that, if \(\Delta t\) is small enough,

\[\begin{equation} \begin{split} B(\tau) & = (1-\psi(\tau-\Delta t))(1-\psi(\tau-2\Delta t))\ldots(1-\psi(0)) \\ & \approx e^{-\psi(\tau-\Delta t)} e^{-\psi(\tau-2\Delta t)} \ldots e^{-\psi(0)} \\ & \approx e^{-\int_0^\tau \psi(a) \text{d}a}\,. \end{split} \tag{3.3} \end{equation}\]

Hence,

\[\begin{equation} A(\tau) = \phi(\tau) B(\tau) = \phi(\tau) e^{-\int_0^\tau \psi(a) \text{d} a}\,, \tag{3.4} \end{equation}\]

which defines the original shape of the Kermack and McKendrick model.
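The limit taken in equation (3.3) can be checked numerically. The following is a minimal sketch, assuming that the probability of removal during one interval is \(\psi(a)\Delta t\) (the factor \(\Delta t\) is left implicit in the equation) and using a hypothetical removal rate \(\psi(a) = 0.1 + 0.05a\) chosen only for illustration. The function names are illustrative as well.

```python
import math


def psi(a):
    """Hypothetical removal rate that grows with the time since infection."""
    return 0.1 + 0.05 * a


def survival_product(tau, dt):
    """Discrete B(tau): product of the per-interval survival probabilities."""
    steps = round(tau / dt)
    b = 1.0
    for k in range(steps):
        # Assumption: probability of removal in one interval is psi * dt
        b *= 1.0 - psi(k * dt) * dt
    return b


def survival_exponential(tau):
    """Continuous limit exp(-int_0^tau psi(a) da), integrated by hand."""
    return math.exp(-(0.1 * tau + 0.025 * tau**2))


tau = 5.0
for dt in (0.5, 0.05, 0.005):
    print(dt, survival_product(tau, dt), survival_exponential(tau))
```

As \(\Delta t\) decreases, the product should approach the exponential, which is the approximation used to go from the second to the third line of equation (3.3).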

However, in the literature it is common to present as the Kermack and McKendrick model the special case they analyze in their paper in which both the infectivity and removal rates are constant. Indeed, if we set \(\phi(\tau) = \beta\) and \(\psi(\tau)=\mu\),

\[\begin{equation} A(\tau) = \beta e^{-\int_0^\tau \mu \text{d}{a}} = \beta e^{-\mu\tau}\,, \tag{3.5} \end{equation}\]

and defining the number of infected individuals at time \(t\) as

\[\begin{equation} I(t) \equiv -\frac{1}{\beta} \int_0^\infty A(\tau) \frac{\text{d}S(t-\tau)}{\text{d}t} \text{d}\tau \,, \tag{3.6} \end{equation}\]

equation (3.1) reads

\[\begin{equation} \frac{\text{d}S(t)}{\text{d}t} = - \beta I(t) S(t)\,. \tag{3.7} \end{equation}\]

If we now differentiate expression (3.6), using Leibniz’s rule,

\[\begin{equation} \begin{split} \frac{\text{d}I(t)}{\text{d}t} & = -\frac{\text{d}S(t)}{\text{d}t} - \int_{-\infty}^t \frac{\partial}{\partial t} e^{-\mu(t-\tau)} \frac{\text{d}S(\tau)}{\text{d}t} \text{d}\tau \\ & = - \frac{\text{d}S(t)}{\text{d}t} + \mu \int_0^\infty e^{-\mu \tau} \frac{\text{d}S(t-\tau)}{\text{d}t} \text{d} \tau \\ & = \beta I(t) S(t) - \mu I(t)\,, \end{split} \tag{3.8} \end{equation}\]

together with the fact that the population, \(N\), is closed, \(S(t) + I(t) + R(t) = N\), we obtain the system of equations

\[\begin{equation} \left\{\begin{array}{l} \frac{\text{d}S(t)}{\text{d}t} = - \beta I(t) S(t) \\ \frac{\text{d}I(t)}{\text{d}t} = \beta I(t) S(t) - \mu I(t) \\ \frac{\text{d}R(t)}{\text{d}t} = \mu I(t) \end{array}\right. \tag{3.9} \end{equation}\]

which is the model that is usually introduced as the Kermack-McKendrick model, even though we have seen that their original contribution was much more general [160].

Equation (3.9) is also often used to introduce epidemic models in the literature as it constitutes one of the most basic models. As we are considering that every individual can contact every other, this model is also known as the homogeneous mixing model [145], [161]. However, it should be noted that sometimes a slightly different version of this set of equations is presented. Indeed, if we define the fraction of susceptible individuals in the population as \(s(t)\equiv S(t)/N\), and similarly for the other compartments, the expression for the evolution of infected individuals becomes

\[\begin{equation} \frac{\text{d}i(t)}{\text{d}t} = \beta N i(t) s(t) - \mu i(t)\,. \tag{3.10} \end{equation}\]

Hence, the larger the population, the faster the spreading. This is known as the density dependent approach. However, we can formulate a very similar model in which we define the infectivity rate as \(\phi(\tau) = \beta/N\), so that

\[\begin{equation} \frac{\text{d}i(t)}{\text{d}t} = \beta i(t) s(t) - \mu i(t)\, \tag{3.11} \end{equation}\]

is independent of \(N\). This latter approach is called frequency dependent and is probably the most common one in the literature of epidemic processes on networks. Both approaches are valid and depend on the specific disease that is being modeled, see [162] for a deeper discussion of this matter.

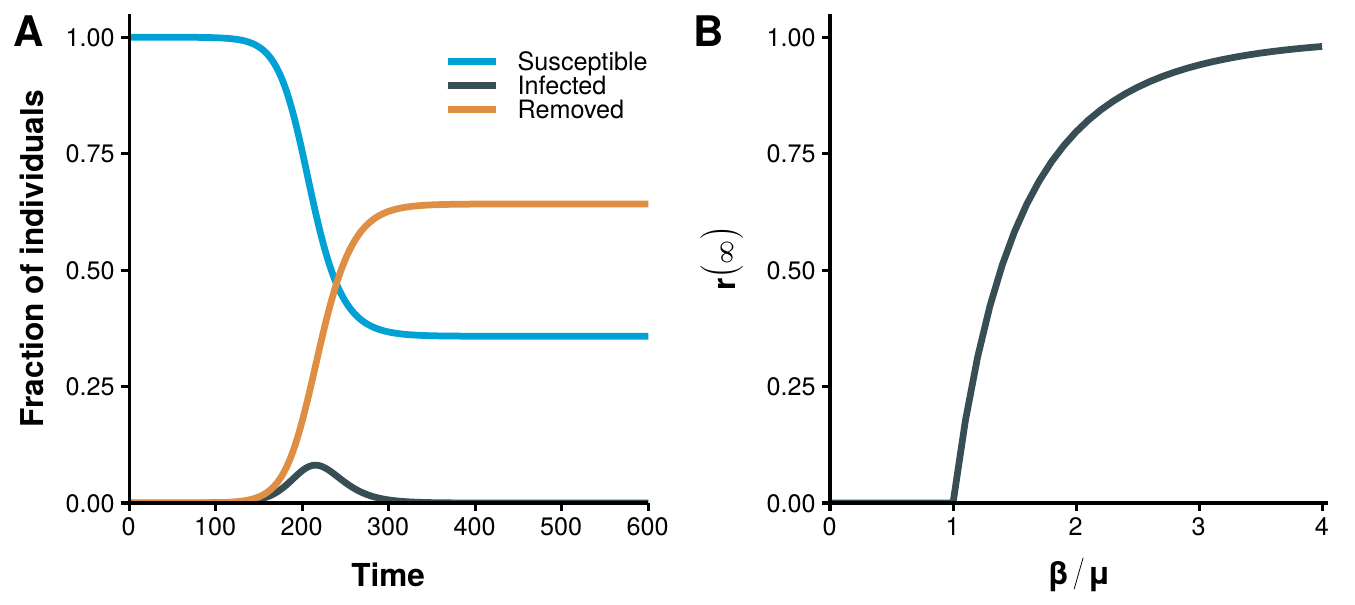

Despite the simplicity of this model, it provides two very powerful insights about disease dynamics. The first one is related to the reasons that account for the termination of an epidemic. Until the publication of this model, the most accepted explanations in medical circles were that an epidemic stopped either because all susceptible individuals had been removed or because during the course of the epidemic the virulence of the organism causing the disease decreased gradually [163]. Yet, this model shows that with a fixed virulence (\(\beta\)) it is possible to reach states in which the epidemic fades out even if there are still susceptible individuals. There is no closed form solution of the model and, although some approximations can be made to show this behavior, for our purposes it suffices to show a numerical solution, figure 3.1A.

Figure 3.1: Basic results of the homogeneous mixing model. In panel A the evolution of the set of equations (3.9) as a function of time with \(\beta=0.16\) and \(\mu=0.10\) is shown. It is possible to reach a disease free state with a fraction of susceptible individuals larger than 0. In panel B the total fraction of recovered individuals in equilibrium conditions as a function of \(\beta/\mu\) is shown. For simplicity the frequency dependent approach has been used so that the threshold is 1.
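The behavior described for panel A can be explored with a short numerical integration. The sketch below is only illustrative: it integrates the frequency dependent version of equations (3.9) in terms of fractions with \(\beta=0.16\) and \(\mu=0.10\), and it assumes an initial infected fraction of \(10^{-3}\) and a fourth-order Runge-Kutta scheme, neither of which is specified in the text.

```python
def sir_rhs(state, beta, mu):
    """Right-hand side of the SIR equations for the fractions (s, i, r)."""
    s, i, r = state
    new_infections = beta * i * s
    return (-new_infections, new_infections - mu * i, mu * i)


def rk4_step(state, dt, beta, mu):
    """Advance the state by one step of the classical Runge-Kutta method."""
    def shifted(k, h):
        return tuple(x + h * dx for x, dx in zip(state, k))

    k1 = sir_rhs(state, beta, mu)
    k2 = sir_rhs(shifted(k1, dt / 2), beta, mu)
    k3 = sir_rhs(shifted(k2, dt / 2), beta, mu)
    k4 = sir_rhs(shifted(k3, dt), beta, mu)
    return tuple(
        x + dt / 6 * (a + 2 * b + 2 * c + d)
        for x, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def integrate_sir(beta, mu, i0, t_max, dt):
    """Integrate from (1 - i0, i0, 0) up to t_max and return the final state."""
    state = (1.0 - i0, i0, 0.0)
    for _ in range(round(t_max / dt)):
        state = rk4_step(state, dt, beta, mu)
    return state


# i0, t_max and dt are assumptions made for this example
s_end, i_end, r_end = integrate_sir(beta=0.16, mu=0.10, i0=1e-3,
                                    t_max=1000.0, dt=0.1)
print(f"s = {s_end:.3f}, i = {i_end:.2e}, r = {r_end:.3f}")
```

With these values the infected fraction vanishes at long times while a sizable fraction of the population remains susceptible, in line with the caption.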

At this point a clarification might be in order. During the introduction we said that Hamer had already shown in 1906 that it was possible for an epidemic to end despite the existence of large numbers of susceptible persons in the population. However, the difference resides in that Hamer's proposal was based on data about measles, while this model is formulated without any specific disease in mind. Indeed, although clearly influenced by Hamer’s and Ross’ works, one of the great achievements of Kermack and McKendrick was to establish a formulation based only on mechanistic principles, regardless of the specific properties of the disease. Nonetheless, the most important result of this model has not been discussed yet: the epidemic threshold.

Suppose that in a completely susceptible population we introduce a tiny amount of infected individuals so that \(s(t=0) \equiv s_0 = 1 - \epsilon\) and \(i(t=0) \equiv i_0 = \epsilon\) with \(\epsilon\rightarrow 0\). If we linearize equation (3.10) around this point, we have

\[\begin{equation} \frac{\text{d}i(t)}{\text{d}t} \approx \beta N i_0 s_0 - \mu i_0\,, \tag{3.12} \end{equation}\]

which only grows if \(\beta N - \mu > 0\). Hence, there exists a minimum susceptible population at the initial state below which an epidemic cannot take place, the epidemic threshold:

\[\begin{equation} N_c > \frac{\mu}{\beta}\,. \tag{3.13} \end{equation}\]

Note that the formulation of this threshold can vary slightly according to the characteristics of the model. For instance, in the frequency dependent approach, equation (3.11), the epidemic threshold is defined by

\[\begin{equation} \frac{\beta}{\mu} > 1\,, \tag{3.14} \end{equation}\]

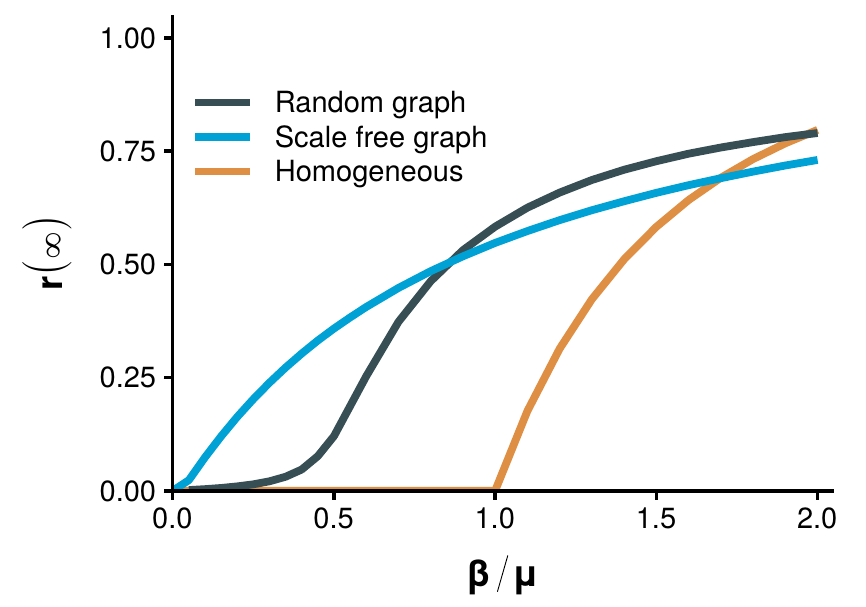

which is independent of \(N\). The existence of this threshold is numerically demonstrated in figure 3.1B, where the final fraction of recovered individuals as a function of the ratio \(\beta/\mu\) is shown. Regardless of the specific form of the condition, the message is that it is possible to explain why an epidemic might not spread in a population of fully susceptible individuals. Moreover, it also provides a mechanism to fight diseases before they spread. Indeed, in equation (3.13) we have simply considered that \(S_0 = N\), but if we were able to immunize a fraction of the population so that \(S_0 < \mu/\beta\) then the epidemic could not take place. In other words, we would have conferred on the population the herd immunity discussed at the beginning of the chapter.
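The threshold and the immunization argument can be illustrated with a few lines of code. The sketch below relies on the standard final-size relation of the frequency dependent SIR model, \(r_\infty = 1 - e^{-(\beta/\mu) r_\infty}\), valid for a vanishing initial infected fraction. This relation is not derived in the text and is introduced here as an assumption. The critical immune fraction is the frequency dependent analogue of the condition \(S_0 < \mu/\beta\), that is, \(s_0 = 1 - p < \mu/\beta\). Function names are illustrative.

```python
import math


def final_size(ratio):
    """Final recovered fraction solving r = 1 - exp(-ratio * r), ratio = beta/mu."""
    if ratio <= 1.0:
        return 0.0  # below the threshold only the trivial solution r = 0 exists

    def f(r):
        # f(r) = r - 1 + exp(-ratio * r), written with expm1 for accuracy
        return r + math.expm1(-ratio * r)

    # f is negative just above 0 and positive at r = 1, so we can bisect
    lo, hi = 1e-12, 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def critical_immune_fraction(ratio):
    """Smallest immune fraction p such that s_0 = 1 - p stays below mu/beta."""
    return max(0.0, 1.0 - 1.0 / ratio)


for ratio in (0.5, 1.0, 1.6, 3.0):
    print(ratio, round(final_size(ratio), 3),
          round(critical_immune_fraction(ratio), 3))
```

Below \(\beta/\mu = 1\) the final size is zero, and above it grows continuously, which is the qualitative shape described for figure 3.1B.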

3.1.1 Introducing the age compartment

Since the establishment of epidemiology as a science, a lot of attention has been devoted to the study of measles, as its recurring patterns puzzled physicians and mathematicians alike. The distinguishing characteristic of measles epidemics is that they had a very regular temporal pattern with periodic outbreaks of the disease, as shown in figure 3.2. As this disease especially affects children and also confers permanent immunity on those who have suffered it, analyzing the time evolution over long time scales to obtain the patterns required the inclusion of age in the models. Nevertheless, with the basic model that we have analyzed we can already propose a plausible explanation for this behavior. Indeed, we know that if the number of susceptibles in the population is below a given threshold, the epidemic cannot take place. Thus, it seems reasonable to think that once the epidemic fades out, there is a period in which there are not enough susceptibles for it to appear again. Yet, when new children are born, the number of susceptibles will increase, possibly going above the threshold and therefore allowing a new outbreak.

A similar explanation was already proposed by Soper in 1929 [164], although it only matched the observations qualitatively, not quantitatively. It was Bartlett who, in 1957, finally provided a quantitative explanation of the phenomenon [165]. Besides the details that we have already discussed, his proposal included a new factor that we have not mentioned yet. He argued that the problem of previous models was that they were deterministic, an approximation that is only valid in very large populations. However, it was observed that the periodicity of measles depended on the size of the city, and especially so in small towns. In physical terms, we would say that there were finite size effects, tearing down assumption 6 (see section 3.1). Thus, he proposed to use a stochastic model for which he could not obtain a closed form solution, so he had to resort to an “electronic computer”. Nowadays the use of stochastic computational simulations is much more common than the deterministic approach. The reasons why this approach is more favorable are out of the scope of this thesis (see, for instance, [140], [166]–[168] for a discussion), but we will take this opportunity to say that in the following sections we will mostly work with stochastic simulations, rather than deterministic approaches. Before concluding the discussion about Bartlett’s paper, we find it worth highlighting that it was presented during a meeting of the Royal Statistical Society, in 1956, after which a discussion followed. In said discussion, Norman T. J. Bailey said “One of the signs of the times is the use of an electronic computer to handle the Monte Carlo experiments. Provided they are not made an excuse for avoiding difficult mathematics, I think there is a great scope for such computers in biometrical work”. And indeed there was, as 25 years later Mr. Bailey was appointed Professor of Medical Informatics [169].

![Measles epidemics in New York from 1906 to 1948. This figure represents the number of reported cases of measles in the city of New York from 1906 to 1948 with a biweekly resolution. There are some gaps due to missing reports. Data obtained from [170].](images/Fig_chap3_homo_measles.png)

Figure 3.2: Measles epidemics in New York from 1906 to 1948. This figure represents the number of reported cases of measles in the city of New York from 1906 to 1948 with a biweekly resolution. There are some gaps due to missing reports. Data obtained from [170].

Returning to our discussion, it is not surprising, then, that McKendrick already introduced age in his models in 1926 [171], one year before the publication of the full model that we have already explored. However, to introduce age we will use a slightly more modern formulation that will simplify the analysis. In particular, we need to revisit assumption 4, i.e., individuals are only distinguishable by their health status.

Let us state that individuals can now be identified both by their health status and their age. Hence, we have to add more compartments to the model, one for each age group and health status combination. In other words, rather than having three compartments, \(S,I,R\), we now have 3 times the number of age brackets considered, i.e. \(S_a, I_a, R_a\), with \(a\) being the age bracket the individuals belong to (see section 2.6 for the definition of age bracket). Moreover, we will suppose that the disease dynamics is much faster than the demographic evolution of the population. The only thing left is to decide how to go from one compartment to another:

- For the rate of infectivity, we will define an auxiliary expression that will facilitate the discussion. By inspection of equation (3.9), we can define the force of infection [163] as

\[\begin{equation} \lambda(t) \equiv \phi(\tau) I(t) = \beta I(t)\,, \tag{3.15} \end{equation}\]

which does not depend on any characteristic of the individual. Hence, we can simply incorporate age by modifying the force of infection so that

\[\begin{equation} \lambda(t,a) = \sum_{a'} \phi(\tau,a,a') I_{a'}(t)\,. \tag{3.16} \end{equation}\]

This way, both the age of the individual that is getting infected (\(a\)) and the age of all other individuals (\(\sum_{a'}\)) are taken into account. Furthermore, we can separate \(\phi(\tau,a,a')\) into two components: one accounting for the rate of contacts between individuals of age \(a\) and \(a'\) and another one accounting for the likelihood that such contacts lead to an infection. Hence,

\[\begin{equation} \phi(\tau,a,a') \equiv C(a,a') \beta(a,a')\,. \tag{3.17} \end{equation}\]

Recalling section 2.6, the term \(C(a,a')\) can be obtained from the contact surveys that we have already studied. On the other hand, we will suppose that the likelihood of infection is independent of the age so that \(\beta(a,a') = \beta\).

- For the rate of recovery, we will assume that it is independent of the age of the individual, i.e. \(\mu(a) = \mu\).

Under these assumptions, the homogeneous mixing model with age dependent contacts reads

\[\begin{equation} \left\{\begin{array}{l} \frac{\text{d}S_a(t)}{\text{d}t} = - \sum_{a'}\beta C(a,a') I_{a'}(t) S_a(t) \\ \frac{\text{d}I_a(t)}{\text{d}t} = \sum_{a'}\beta C(a,a') I_{a'}(t) S_a(t) - \mu I_a(t) \\ \frac{\text{d}R_a(t)}{\text{d}t} = \mu I_a(t) \end{array}\right. \tag{3.18} \end{equation}\]

Despite its simplicity, this model is still widely used today, especially in the context of metapopulations9 [132]. Moreover, as in all compartmental models, it is straightforward to extend it to include more complex dynamics. For instance, we can add the exposed state so that individuals that get infected remain in a latent state for a certain amount of time before showing symptoms and being able to infect others. This model, known as the SEIR model, can be used to describe influenza dynamics [173]:

\[\begin{equation} \left\{\begin{array}{l} \frac{\text{d}S_a(t)}{\text{d}t} = - \sum_{a'}\beta C(a,a') I_{a'}(t) S_a(t) \\ \frac{\text{d}E_a(t)}{\text{d}t} = \sum_{a'}\beta C(a,a') I_{a'}(t) S_a(t) - \sigma E_a(t)\\ \frac{\text{d}I_a(t)}{\text{d}t} = \sigma E_a(t) - \mu I_a(t) \\ \frac{\text{d}R_a(t)}{\text{d}t} = \mu I_a(t) \end{array}\right. \,. \tag{3.19} \end{equation}\]

The new parameter, \(\sigma\), accounts for the rate at which an individual from the latent state goes to the infectious state, in a similar fashion as \(\mu\) does for the transition from \(I\) to \(R\). This model will be the focus of the last part of this section.
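To make the structure of equations (3.19) more concrete, the following sketch integrates them for two age groups. All numerical values (group sizes, contact matrix, rates, seed and integration step) are hypothetical and chosen only so that an outbreak takes place. The Runge-Kutta integrator is our own choice and not part of the model.

```python
def seir_rhs(y, C, beta, sigma, mu):
    """Right-hand side of the age-structured SEIR equations.

    y stores [S_0..S_n-1, E_0..E_n-1, I_0..I_n-1, R_0..R_n-1].
    """
    n = len(C)
    S, E, I = y[:n], y[n:2 * n], y[2 * n:3 * n]
    # Force of infection acting on each age group a
    force = [beta * sum(C[a][b] * I[b] for b in range(n)) for a in range(n)]
    dS = [-force[a] * S[a] for a in range(n)]
    dE = [force[a] * S[a] - sigma * E[a] for a in range(n)]
    dI = [sigma * E[a] - mu * I[a] for a in range(n)]
    dR = [mu * I[a] for a in range(n)]
    return dS + dE + dI + dR


def rk4_step(y, dt, *params):
    """One step of the classical fourth-order Runge-Kutta method."""
    k1 = seir_rhs(y, *params)
    k2 = seir_rhs([v + dt / 2 * k for v, k in zip(y, k1)], *params)
    k3 = seir_rhs([v + dt / 2 * k for v, k in zip(y, k2)], *params)
    k4 = seir_rhs([v + dt * k for v, k in zip(y, k3)], *params)
    return [v + dt / 6 * (a + 2 * b + 2 * c + d)
            for v, a, b, c, d in zip(y, k1, k2, k3, k4)]


# Hypothetical setting: two age groups and a symmetric contact matrix
N = [300.0, 700.0]
C = [[0.004, 0.001], [0.001, 0.002]]
beta, sigma, mu = 0.3, 0.5, 0.25

# One infectious individual in the first group, everyone else susceptible
y = [N[0] - 1.0, N[1], 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
dt, t_max = 0.05, 200.0
for _ in range(round(t_max / dt)):
    y = rk4_step(y, dt, C, beta, sigma, mu)

n = len(N)
totals = [y[a] + y[n + a] + y[2 * n + a] + y[3 * n + a] for a in range(n)]
recovered = y[3 * n:]
print("population per group:", totals)
print("recovered per group:", recovered)
```

Since the equations only move individuals between health states, the total population of each age group is conserved, which is a useful sanity check for any implementation.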

3.1.2 Changing demographics

I call myself a Social Atom - a small speck on the surface of society.

“Memoirs of a social atom”, William E. Adams

As we discussed earlier, for a long period of time the developments in mathematical epidemiology were disconnected from data, at least until Anderson and May arrived in the field in the late 1980s. It is not so surprising, then, that even though age was incorporated into models from the beginning of the discipline, we had to wait until the late 1990s to get experimental data on age mixing patterns.

The first attempt to quantify the mixing behavior responsible for infections transmitted by respiratory droplets or close contact (which are the ones best suited to be studied with homogeneous mixing models) was the pioneering work by Edmunds et al. in 1997 [174]. Their results, however, can hardly be extrapolated as they only analyzed a population consisting of 62 individuals coming from two British universities. The first large-scale experiment to measure these patterns was conducted by Mossong et al. in 2008 [131]. In their study, they measured the age-dependent contact rates in eight European countries (Belgium, Finland, Germany, Great Britain, Italy, Luxembourg, Netherlands and Poland), as part of the European project Polymod, using contact diaries. In the following years other authors followed the route opened by Mossong et al. and measured the age-dependent social contacts of countries such as China [175], France [176], Japan [177], Kenya [178], Russia [179], Uganda [180] or Zimbabwe [181], as well as the Special Administrative Region of Hong Kong [182], greatly expanding the available empirical data.

These experiments provide us with the key ingredient required for the introduction of age compartments into the models, the age contact matrix, \(C\). There are, however, a couple of ways of defining this matrix that are equivalent under certain transformations. We define the matrix in extensive scale, \(C\), as the one in which each element \(C_{i,j}\) contains the total number of contacts between two age groups \(i\) and \(j\). It is trivial to see that given this definition there must be reciprocity in the system, i.e.,

\[\begin{equation} C_{i,j} = C_{j,i}\,. \tag{3.20} \end{equation}\]

A similar definition can be obtained if instead of accounting for all the contacts between two groups we want to capture the average number of contacts that a single individual of group \(i\) will have with individuals in group \(j\):

\[\begin{equation} M_{i,j} = \frac{C_{i,j}}{N_i}\,, \tag{3.21} \end{equation}\]

where \(N_i\) is the number of individuals in group \(i\). We call the matrix in this form the intensive scale. This is the usual format in which this matrix is given. In this case, reciprocity is fulfilled if

\[\begin{equation} M_{i,j} N_i = M_{j,i} N_j\,. \tag{3.22} \end{equation}\]

This last expression raises an interesting question. The reciprocity relation depends on the population in each age bracket, \(N_i\). Thus, if the matrix \(M\) was measured in year \(y\), we have that \(M_{i,j}(y) N_i(y) = M_{j,i}(y) N_j(y)\). However, if we want to use this matrix in a different year \(y'\), that is, with a different demographic structure due to the inherent evolution of the population, reciprocity will no longer be fulfilled, i.e.,

\[\begin{equation} M_{i,j}(y) N_i(y') \neq M_{j,i}(y) N_j(y')\,, \tag{3.23} \end{equation}\]

unless the population has not changed. This is a major problem because there are diseases whose temporal dynamics are comparable to the ones of the demographic evolution. For instance, tuberculosis is a disease in which age is particularly important and the incubation period ranges from 1 to 30 years [183]. Hence, to properly forecast the evolution of tuberculosis in a population it is strictly necessary to project somehow these age-contact matrices into the future [134]. Even for diseases that have much shorter dynamics, such as influenza, this is a relevant problem because, given how costly these experiments are, it is impractical to repeat them every few years to obtain updated matrices. As a consequence, if we simply want to study the impact of influenza this year, more than 10 years after the work by Mossong et al., we need to devise a way to properly update them.

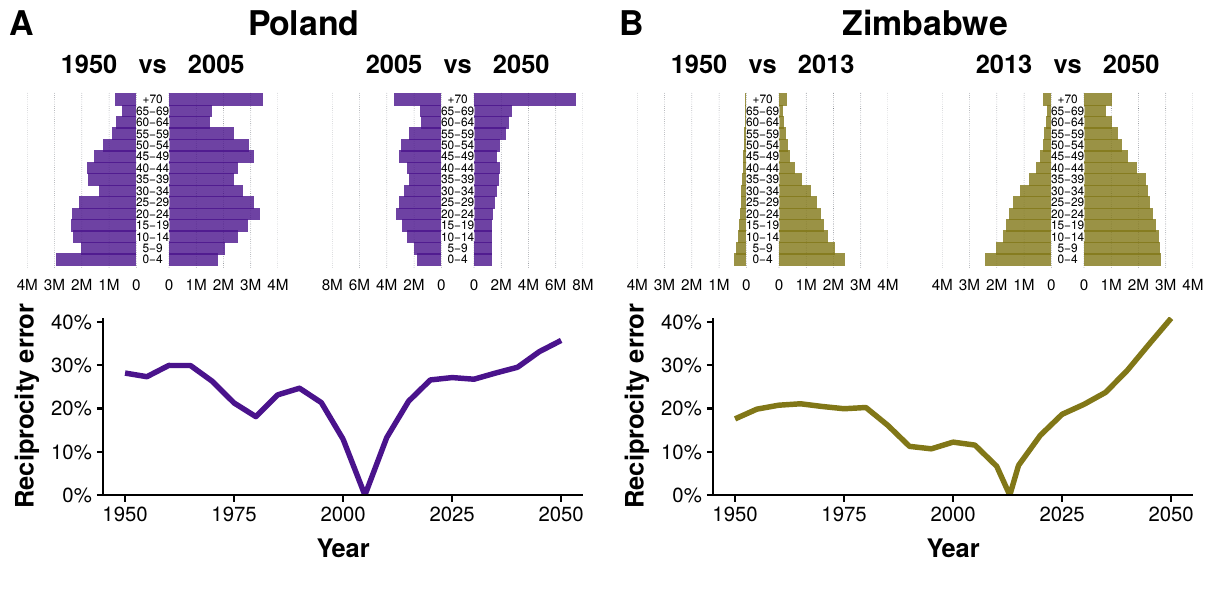

Figure 3.3 exemplifies, for Poland and Zimbabwe, the error we would make if we did not adapt \(M\) and blindly used it with demographic structures that differ from the original one. We define the reciprocity error as

\[\begin{equation} E = \frac{\sum_{i,j>i} |C_{i,j}-C_{j,i}|}{0.5\cdot \sum_{i,j} C_{i,j}} = \frac{\sum_{i,j>i} | M_{i,j} N_i - M_{j,i} N_j |}{0.5 \cdot \sum_{i,j} M_{i,j} N_i}\,, \tag{3.24} \end{equation}\]

to quantify the fraction of links that are not reciprocal. The two countries under consideration have very different demographic patterns, both in the past and in the future, and yet we can see that the error is quite large in both of them.

Figure 3.3: Reciprocity error as a function of time in Poland and Zimbabwe. For each country, in the top plots the demographic structures of 1950 and 2050 are compared to the one existing when the contact matrices were measured. In the bottom plot the reciprocity error as a function of time is shown. For the matrix to be correct in different years the error should be 0, but that only happens in the year when the data was collected.
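To make the definition of the reciprocity error concrete, the following minimal sketch evaluates equation (3.24) for an intensive matrix \(M\) and a vector of group sizes \(N\). The three age groups, the contact counts and the population vectors are hypothetical values chosen only for illustration, and the function name `reciprocity_error` is not taken from the original work.

```python
import numpy as np

def reciprocity_error(M, N):
    """Reciprocity error E of equation (3.24) for an intensive matrix M and group sizes N."""
    M = np.asarray(M, dtype=float)
    N = np.asarray(N, dtype=float)
    C = M * N[:, None]                      # C[i, j] = M[i, j] * N[i], inverse of eq. (3.21)
    upper = np.triu_indices_from(C, k=1)    # index pairs with j > i
    numerator = np.abs(C[upper] - C.T[upper]).sum()
    return numerator / (0.5 * C.sum())

# Hypothetical survey year: a symmetric extensive matrix and its group sizes
N_survey = np.array([100.0, 200.0, 300.0])
C_survey = np.array([[400.0, 300.0, 200.0],
                     [300.0, 800.0, 600.0],
                     [200.0, 600.0, 900.0]])
M = C_survey / N_survey[:, None]            # intensive matrix, eq. (3.21)

# Hypothetical later year with an older population
N_later = np.array([60.0, 200.0, 380.0])

error_survey = reciprocity_error(M, N_survey)   # reciprocal by construction
error_later = reciprocity_error(M, N_later)     # reciprocity is broken
print(error_survey, error_later)
```

With these made-up numbers the error vanishes (up to rounding) in the survey year and becomes strictly positive as soon as the same \(M\) is combined with a different demographic structure.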

The problem is that both \(C_{i,j}\) and \(M_{i,j}\) implicitly contain information about the demographic structure of the population at the time they were measured. To solve this problem, we define the intrinsic connectivity matrix as

\[\begin{equation} \Gamma_{i,j} = M_{i,j} \frac{N}{N_j}\,. \tag{3.25} \end{equation}\]

This matrix corresponds, except for a global factor, to the contact pattern in a “rectangular” demography (a population structure where all age groups have the same density). Hence, it does not have any information about the demographic structure of the population.

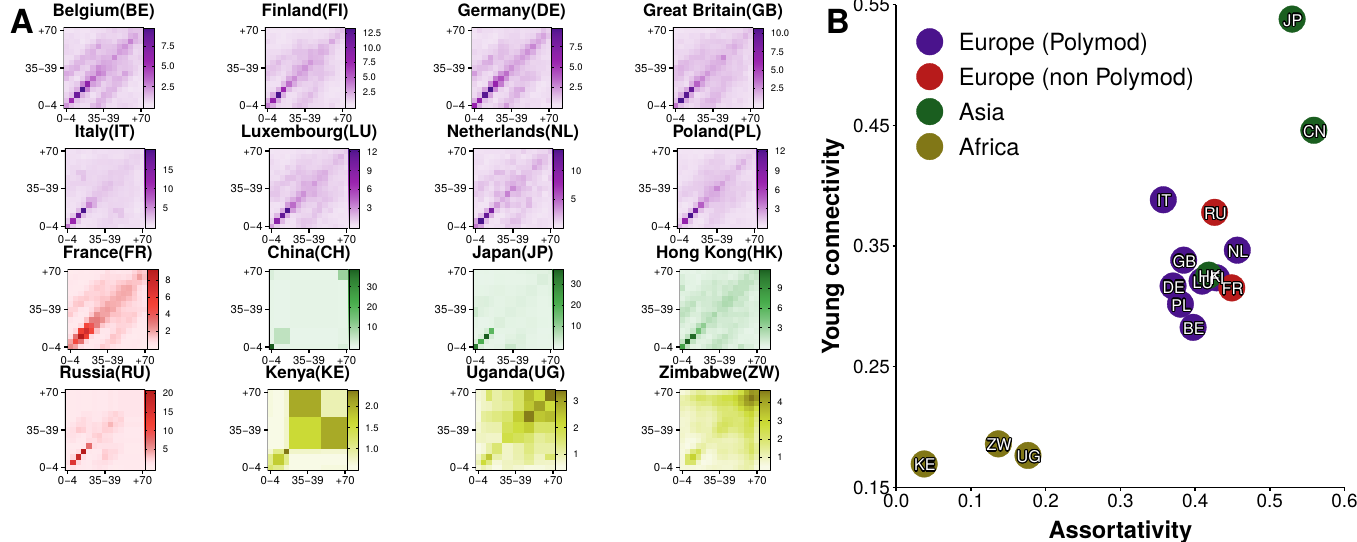

In figure 3.4A we show the intrinsic connectivity matrices for each of the 16 regions enumerated previously. Interestingly, the contact patterns are quite different from region to region. To facilitate the comparison, in figure 3.4B we plot the fraction of connectivity that corresponds to young individuals (less than 20 years old) as a function of the assortativity of each matrix as defined by Newman [73] (this quantity is an adaptation of the Pearson correlation coefficient so that it is equal to 1 if individuals tend to contact those who are like them, -1 in the opposite case and 0 if the pattern is completely uncorrelated). We can see that regions with similar demographic structures and culture tend to cluster together, although it is not possible to disentangle which is the precise cause leading to one pattern or the other.

Figure 3.4: Age contact matrices from 16 regions. A) Intrinsic connectivity matrix, \(\Gamma_{i,j}\), of each region. There is not a standard definition of age brackets and thus each study had its own definition. For comparison purposes, we have adapted the data to 15 age brackets: \([0,5),[5,10),\ldots,[65,70),+70\). B) Proportion of connectivity corresponding to individuals younger than 20 versus the assortativity coefficient of each matrix.

With this matrix we can now easily compute \(M\) at any other time, as long as we know the demographic structure of the population at that time:

\[\begin{equation} M_{i,j} (y') = \Gamma_{i,j} \frac{N_j(y')}{N(y')} = M_{i,j}(y) \frac{N(y)N_j(y')}{N_j(y)N(y')}\,. \tag{3.26} \end{equation}\]

In our case, we will obtain this data from the UN population division database, which contains information of both the past demographic structures and their projections to 2050 for the whole world [184].
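The projection in equations (3.25) and (3.26) can be sketched in a few lines. The example below is only an illustration under stated assumptions: the three age groups and all numerical values are hypothetical, and the function names `intrinsic_connectivity` and `project_matrix` are introduced here, not taken from the original work. It checks that the projected matrix is reciprocal with respect to the new demography.

```python
import numpy as np

def intrinsic_connectivity(M, N):
    """Gamma[i, j] = M[i, j] * N_total / N[j], equation (3.25)."""
    M = np.asarray(M, dtype=float)
    N = np.asarray(N, dtype=float)
    return M * N.sum() / N[None, :]

def project_matrix(M, N_old, N_new):
    """Contact matrix M(y') obtained from M(y) through equation (3.26)."""
    gamma = intrinsic_connectivity(M, N_old)
    N_new = np.asarray(N_new, dtype=float)
    return gamma * N_new[None, :] / N_new.sum()

# Hypothetical survey-year data (reciprocal by construction)
N_survey = np.array([100.0, 200.0, 300.0])
C_survey = np.array([[400.0, 300.0, 200.0],
                     [300.0, 800.0, 600.0],
                     [200.0, 600.0, 900.0]])
M_survey = C_survey / N_survey[:, None]

# Hypothetical demographic structure in another year
N_later = np.array([60.0, 200.0, 380.0])

gamma = intrinsic_connectivity(M_survey, N_survey)
M_later = project_matrix(M_survey, N_survey, N_later)
C_later = M_later * N_later[:, None]    # extensive matrix in the new year
print(np.allclose(C_later, C_later.T))
```

Since \(\Gamma_{i,j}\) is symmetric whenever the original matrix is reciprocal, the product \(M_{i,j}(y')N_i(y')\) is symmetric as well, which is what the final check verifies.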

We conclude this section addressing how this correction impacts disease modeling. To this end, we simulate the spreading of an influenza-like disease both with and without corrections on the matrix. We choose influenza because it is a short-cycle disease so that we can assume that the population structure is constant during each simulated outbreak. Besides, it can be effectively modeled using the SEIR model presented in 3.1.1.

To parameterize the model we use the values of an influenza outbreak that took place in Belgium in the season 2008/2009 [132]. Thus, individuals can catch the disease with transmissibility rate \(\beta\) per contact with an infectious individual. The value of \(\beta\) is determined in each simulation so that the basic reproductive number is equal to \(2.12\) using the next generation approach (this procedure will be explained in more detail in section 3.2) [132], [185]. Once infected, individuals remain in a latent state for \(\sigma^{-1} = 1.1\) days on average. Then, they become infectious for \(\mu^{-1} = 3\) days on average, a period during which they can transmit the infection to susceptible individuals. After that, they recover and become immune to the disease. We use a discrete and stochastic model with the population divided into 15 age classes, whose mixing is given by the age contact matrix \(M\). To sum up:

- The probability of an individual belonging to age group \(i\) to get infected is

\[\begin{equation} p_{S\rightarrow E} = \beta \sum_j \frac{M_{i,j}}{N_j} I_j\,. \tag{3.27} \end{equation}\]

- Once in the latent state, the probability of entering the infected state is

\[\begin{equation} p_{E\rightarrow I} = \sigma\,. \tag{3.28} \end{equation}\]

- Finally, an infected individual will recover with probability

\[\begin{equation} p_{I\rightarrow R} = \mu\,. \tag{3.29} \end{equation}\]
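The three transition probabilities above can be turned into a discrete stochastic simulation along the following lines. This is a minimal sketch, not the implementation used for the results: it assumes a time step of one day, binomial draws for each transition, three hypothetical age groups instead of 15, and an arbitrary value of \(\beta\). The function names `seir_step` and `run_outbreak` are introduced here for illustration.

```python
import numpy as np

def seir_step(S, E, I, R, M, N, beta, sigma, mu, rng):
    """Advance the age-structured SEIR state by one time step."""
    # Eq. (3.27): infection probability for a susceptible in each age group
    p_inf = np.clip(beta * (M @ (I / N)), 0.0, 1.0)
    new_E = rng.binomial(S, p_inf)    # S -> E
    new_I = rng.binomial(E, sigma)    # E -> I, eq. (3.28)
    new_R = rng.binomial(I, mu)       # I -> R, eq. (3.29)
    return S - new_E, E + new_E - new_I, I + new_I - new_R, R + new_R

def run_outbreak(M, N, beta, sigma=1 / 1.1, mu=1 / 3, seed_group=0,
                 rng=None, max_steps=10_000):
    """Simulate one outbreak seeded with a single infectious individual."""
    rng = np.random.default_rng() if rng is None else rng
    N = np.asarray(N, dtype=np.int64)
    S, E, I, R = N.copy(), np.zeros_like(N), np.zeros_like(N), np.zeros_like(N)
    S[seed_group] -= 1
    I[seed_group] += 1
    for _ in range(max_steps):
        if E.sum() + I.sum() == 0:    # no latent or infectious individuals left
            break
        S, E, I, R = seir_step(S, E, I, R, M, N, beta, sigma, mu, rng)
    return S, E, I, R

# Hypothetical three-group population and reciprocal contact matrix
N = np.array([1000, 2000, 3000])
M = np.array([[8.0, 3.0, 2.0],
              [1.5, 6.0, 3.0],
              [2 / 3, 2.0, 5.0]])

S, E, I, R = run_outbreak(M, N, beta=0.05, rng=np.random.default_rng(42))
final_size = R.sum()                  # R(t -> infinity) for this realization
print(final_size)
```

Because the process is stochastic, a single realization may die out after a handful of cases, so in practice the final size would be averaged over many runs.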

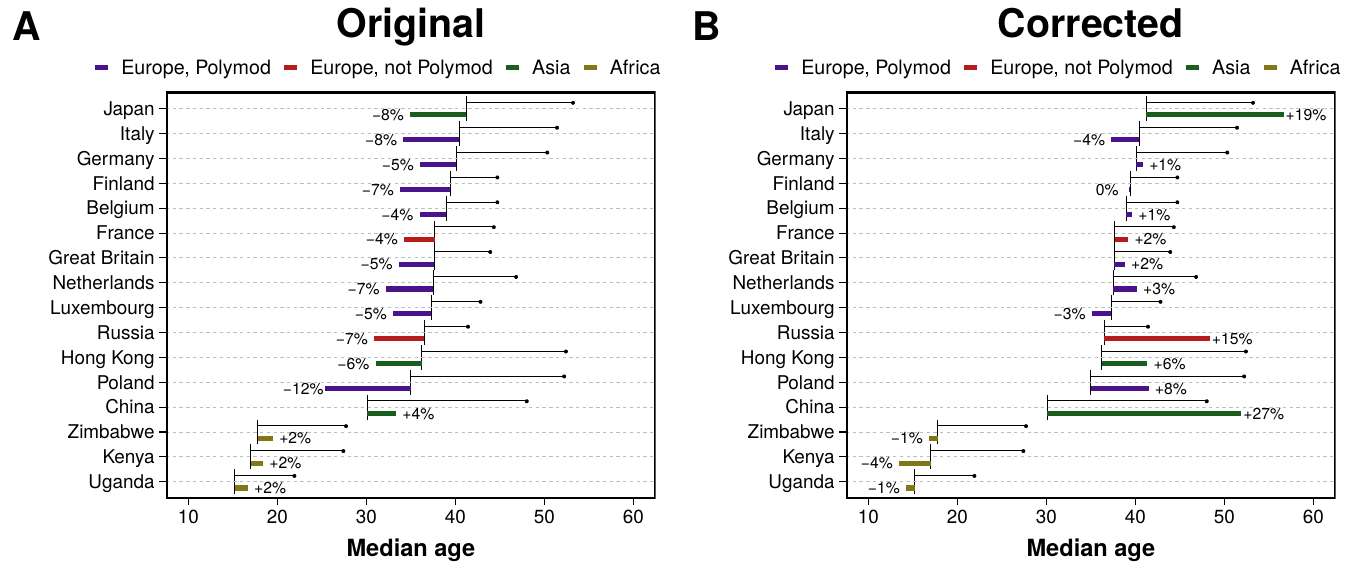

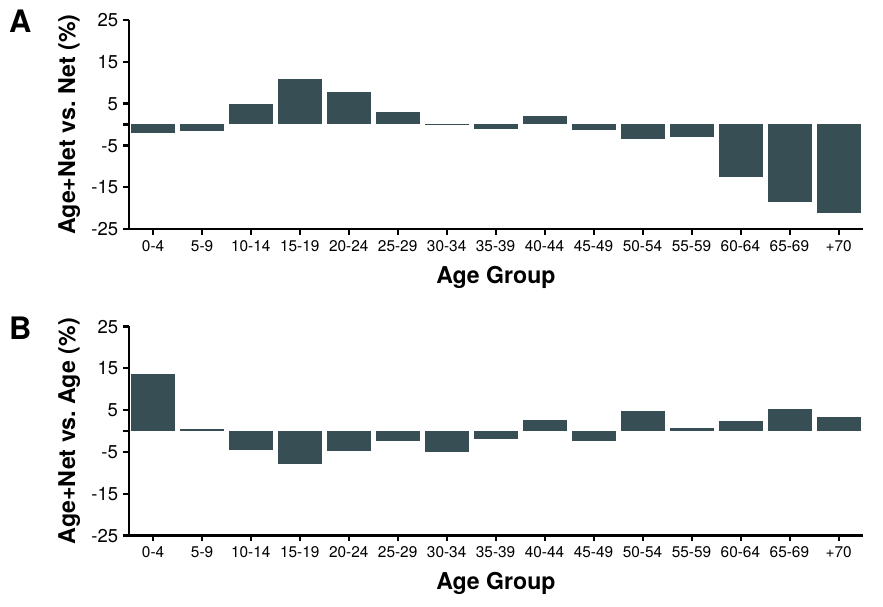

Under these conditions, we compute the predicted size of the epidemic, i.e., \(R(t\rightarrow \infty)\), in years 2000 and 2050. In figure 3.5 we present the results. In particular, in A we show the difference between the predicted size of the epidemic in 2050 versus the one in 2000 using the same \(M\) matrix in both years. In almost all countries the final size of the epidemic is smaller in 2050, except for China and the African countries in which it increases. However, in B we repeat the analysis but using the adapted values of \(M(y')\) obtained using (3.26). In this case, in general, the situation is reversed. Most countries have larger epidemics, except for the African ones. Even more, in countries such as China and Japan the difference is quite large, close to 20%.

Figure 3.5: Predictions of influenza incidence in 2050 with demographic corrections. In both plots the black horizontal line starts at the median age of each region in the year 2000 and ends with a bullet point with the predicted value in 2050. Color bars denote the relative variation of incidence over the same period. In A the predictions are computed using the original contact matrices collected from the surveys. In B the proposed demographic corrections are applied to the matrices.

Summarizing, to create more realistic models we need to incorporate empirically measured data. However, blindly using data without thinking whether it can be applied to the specific system we are studying is not adequate. In the particular case of social mixing matrices, we have seen that even if we keep studying the same country, just moving a few years away from the moment in which the experiment took place dramatically affects the reciprocity of the contacts. This, in turn, leads to important differences in the global incidence for influenza-like diseases, as we have shown in our analysis of the SEIR model. Even more, since there are different intrinsic connectivity patterns across countries, it is possible that there exists a time evolution of this quantity. Indeed, if we believe that the intrinsic pattern is a consequence of the culture of the country, it seems logical to think that an evolving culture will also have evolving intrinsic connectivity patterns. Although predicting how society will change in the future is currently impossible, this should be taken into account as a limitation in any forecast for which heterogeneity in social mixing is a key element.

3.2 The basic reproduction number

One of the cornerstones of modern epidemiology is the basic reproduction number, \(R_0\), defined as the expected number of individuals infected by a single infected person during her entire infectious period in a population which is entirely susceptible. From this definition, it is clear that if \(R_0<1\), each infected individual will produce, on average, less than one infection. Therefore, the disease will not be able to sustain itself in the population. Conversely, if \(R_0>1\) the disease will be able to propagate to a macroscopic fraction of the population. Hence, this simple dimensionless quantity serves three purposes: (1) it tells us whether the disease will be able to invade the population, at least initially; (2) it provides a way to determine which control measures, and at what magnitude, would be the most effective, i.e., which ones will reduce \(R_0\) below 1; (3) it allows us to gauge the risk of an epidemic in emerging infectious diseases [186].

Interestingly, despite its importance, this quantity did not originate in epidemiology. The concept of \(R_0\), and its notation, was formalized by Dublin and Lotka, in 1925, in the context of demography [188]. The similarity of the concept in both fields is obvious: in one it measures the number of new infections per infected individual, while in the other it measures the number of births per female. Yet, in epidemiology the concept was mostly unknown until Anderson and May popularized it 60 years later in the Dahlem conference [189] (see [190] for a nice historical discussion on why it took so long for this concept to mature in epidemiology).

It might be enlightening to introduce the mathematical definition from the point of view of demography. Consider a large population. Let \(F_d(a)\) be the survival function, i.e., the probability for a new-born individual to survive at least to age \(a\), and let \(b(a)\) denote the average number of offspring that an individual will produce per unit of time at age \(a\). The function \(n(a) \equiv b(a) F_d(a)\) is called the reproduction function. Hence, the expected future offspring of a new-born individual, \(R_0\), is [191]

\[\begin{equation} R^{demo}_0 \equiv \int_0^\infty n(a) \text{d} a = \int_0^\infty b(a) F_d(a) \text{d}a\,. \tag{3.30} \end{equation}\]

The translation of this definition to epidemiology is straightforward. First, note that the reproduction function at age \(a\) is equivalent to the expected infectivity of an individual who was infected \(\tau\) units of time ago, \(A(\tau)\) (see equation (3.4)). There is, however, one crucial difference. While in demography it is possible to “create” new individuals regardless of the size of the rest of the population, in epidemiology the creation of new individuals depends both on the infectivity and on the amount of susceptible individuals in the population. Hence,

\[\begin{equation} R_0(\eta) \equiv \int_\Omega S(\xi) \int_0^\infty A(\tau,\xi,\eta)\text{d}\tau \text{d}\xi\,. \tag{3.31} \end{equation}\]

This rather cryptic expression is the most general definition of this quantity [192], although we will see in a moment simpler ones. The expression should be read as follows: the value of \(R_0\) for individuals in an infectious state \(\eta\) is equal to the sum of all individuals in a susceptible state characterized by \(\xi\), of size \(\Omega\), times the infectivity of individuals in said state \(\eta\) who were infected \(\tau\) units of time ago and can infect individuals in state \(\xi\).

In the particular case of the SIR model under the density dependent approach, equation (3.9), the basic reproduction number is simply

\[\begin{equation} \begin{split} R_0 & = S_0 \int_0^\infty A(\tau) \text{d}\tau = S_0 \int_0^\infty \beta e^{-\mu \tau} \text{d}\tau \\ & = \frac{\beta S_0}{\mu}\,, \end{split} \tag{3.32} \end{equation}\]

where \(S_0\) is the number of susceptible individuals at the beginning of the infection, which in the absence of immunized individuals is equal to \(N\). Recall that the linear stability analysis of the SIR model (3.12) yields,

\[\begin{equation} i(t) = i_0 e^{(\beta N- \mu)t}\,, \tag{3.33} \end{equation}\]

which only grows if \(\beta N > \mu\). In other words, \(R_0\) defines precisely the epidemic threshold that we found in the previous section, i.e.,

\[\begin{equation} R_0 = \frac{\beta N}{\mu} > 1\,, \tag{3.34} \end{equation}\]

as heuristically discussed at the beginning of this section. In the frequency dependent approach, which is more common in the network science literature as we shall see in section 3.3, the basic reproduction number is

\[\begin{equation} R_0 = \frac{\beta}{\mu} > 1\,. \tag{3.35} \end{equation}\]
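As a quick numerical check of the density dependent result, the sketch below evaluates the integral in equation (3.32) with the trapezoidal rule and compares it with the closed form \(\beta S_0/\mu\). The parameter values are hypothetical and chosen only so that the numbers are easy to read.

```python
import numpy as np

# Hypothetical parameters for a density dependent SIR model
beta = 2.0e-4      # transmission rate per susceptible individual
mu = 1.0 / 3.0     # recovery rate (3 days of infectiousness on average)
S0 = 5000          # fully susceptible population, S0 = N

# Infectivity A(tau) = beta * exp(-mu * tau), integrated up to a large cutoff
tau = np.linspace(0.0, 200.0, 200_001)
A = beta * np.exp(-mu * tau)
dtau = tau[1] - tau[0]
integral = dtau * (A.sum() - 0.5 * (A[0] + A[-1]))   # trapezoidal rule

R0_numeric = S0 * integral
R0_closed = beta * S0 / mu              # eq. (3.32)
growth_rate = beta * S0 - mu            # exponent of the linearized solution

print(R0_numeric, R0_closed, growth_rate)
```

For these values \(R_0 = 3\), and the sign of \(\beta N - \mu\) agrees with the condition \(R_0 > 1\), as stated by the threshold in equation (3.34).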

To obtain an explicit expression for \(R_0\) in more complex compartmental models, an alternative to linear stability analysis is the next generation matrix approach, proposed by Diekmann in 1990 [192] and further elaborated by van den Driessche and Watmough in 2002 [185]. Briefly, the idea is to study the stability of the disease free state, \(x_0\). To do so, we restrict the model to those compartments with infected individuals and separate the evolution due to new individuals getting infected, \(\mathcal{F}\), from the transitions resulting from any other reason, \(\mathcal{V}\),

\[\begin{equation} \frac{\text{d}x_i(t)}{\text{d}t} = \mathcal{F}_i(x) - \mathcal{V}_i(x)\,, \tag{3.36} \end{equation}\]

where \(x = (x_1,\ldots,x_m)\) denotes the \(m\) infected states in the model. If we now define

\[\begin{equation} F = \left[\frac{\partial \mathcal{F}_i(x_0)}{\partial x_j}\right] \text{~~and~~} V = \left[\frac{\partial \mathcal{V}_i(x_0)}{\partial x_j}\right]\,, \tag{3.37} \end{equation}\]

the next generation matrix is \(FV^{-1}\) and the basic reproductive number can be obtained as

\[\begin{equation} R_0 = \rho(FV^{-1})\,, \tag{3.38} \end{equation}\]

where \(\rho\) denotes the spectral radius [193]. In particular, for the model considered in section 3.1.2, the next generation matrix reads

\[\begin{equation} K_{i,j} = \frac{\beta}{\mu} \frac{M_{i,j}}{N_j}\,. \tag{3.39} \end{equation}\]

This expression can be used to ensure that, regardless of the values of \(M_{i,j}\) and \(N_j\), the starting point of the dynamics is the same. For this reason, when we wanted to address the differences in incidence caused by the changing demographics, we fitted \(\beta\) so that the spectral radius of (3.39) was always \(R_0 = 2.12\).
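The next generation recipe of equations (3.36) to (3.38) can be illustrated with a short sketch. The first part applies it to a single-group SEIR model under the density dependent approach, taking \(x=(E,I)\) as the infected compartments, which recovers \(R_0=\beta N/\mu\). The second part shows how \(\beta\) can be rescaled so that the spectral radius of an age-structured matrix hits a target value. The matrix `K_unit` is assembled following equation (3.39) as printed, with hypothetical values of \(M\) and \(N\), and the function names are introduced here for illustration only.

```python
import numpy as np

def spectral_radius(A):
    """Largest absolute value among the eigenvalues of A."""
    return np.max(np.abs(np.linalg.eigvals(A)))

def seir_r0(beta, sigma, mu, N):
    """R0 of a single-group, density dependent SEIR model via eq. (3.38)."""
    # New infections enter E at rate beta * S * I, with S = N at the disease free state
    F = np.array([[0.0, beta * N],
                  [0.0, 0.0]])
    # Remaining transitions: E leaves at rate sigma, I gains sigma * E and loses mu * I
    V = np.array([[sigma, 0.0],
                  [-sigma, mu]])
    return spectral_radius(F @ np.linalg.inv(V))

def calibrate_beta(K_unit, R0_target):
    """Value of beta such that rho(beta * K_unit) equals R0_target."""
    return R0_target / spectral_radius(K_unit)

# Part 1: homogeneous SEIR with hypothetical parameters
beta, sigma, mu, N_total = 2.0e-4, 1 / 1.1, 1 / 3, 5000
R0_seir = seir_r0(beta, sigma, mu, N_total)

# Part 2: age-structured calibration with hypothetical M and N
N = np.array([1000.0, 2000.0, 3000.0])
M = np.array([[8.0, 3.0, 2.0],
              [1.5, 6.0, 3.0],
              [2 / 3, 2.0, 5.0]])
K_unit = (1.0 / mu) * M / N[None, :]     # eq. (3.39) evaluated at beta = 1
beta_fit = calibrate_beta(K_unit, R0_target=2.12)
R0_check = spectral_radius(beta_fit * K_unit)
print(R0_seir, beta_fit, R0_check)
```

The rescaling works because the next generation matrix is linear in \(\beta\), so its spectral radius scales linearly too. A different normalization of the matrix would only change how `K_unit` is assembled, not the calibration step.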

It is worth pointing out that \(R_0\) clearly depends on the model we choose for the dynamics. As a consequence, even though its epidemiological definition is completely independent from models (number of secondary infections per infected individual in a fully susceptible population), its mathematical formulation is not univocal. Ideally, if in a disease outbreak we knew who infected whom, we would be able to obtain the exact value of \(R_0\). In reality, however, this information is seldom available. Hence, to compute it, one often relies on aggregated quantities, such as \(\beta\) and \(\mu\) in equation (3.35). The problem is that if we assume that a disease can be modeled within a specific framework, we cannot directly compare the value obtained for \(R_0\) with the ones measured for other diseases unless the exact same model has been used to obtain it. This is one of the observations that will motivate our work, which we will describe in section 3.2.3.

3.2.1 Measuring \(\mathbf{R_0}\)

Measuring \(R_0\) is not an easy task, especially in the case of emergent diseases for which fast forecasts are required. An accurate estimation of its value is crucial to planning for the control of an infection, but usually the only available information about the transmissibility of a new infectious disease is restricted to the daily count of new cases. Fortunately, it is possible, under certain conditions, to obtain an expression for \(R_0\) as a function of that data.

Following [194], we will assume that in the beginning of a disease outbreak the growth of the number of infected individuals is exponential. Hence, the number of new infected individuals at time \(t\) will be equal to the number of new infected individuals \(\tau\) time units ago, multiplied by the exponential growth,

\[\begin{equation} \frac{\text{d}S(t)}{\text{d}t} = \frac{\text{d}S(t-\tau)}{\text{d}t} e^{r\tau}\, \tag{3.40} \end{equation}\]

where \(r\) denotes the growth rate. Inserting this expression in (3.1) with \(t\rightarrow 0\),

\[\begin{equation} \frac{\text{d}S(t)}{\text{d}t} = S(t=0) \int_0^\infty A(\tau) \frac{\text{d}S(t)}{\text{d}t} e^{-r\tau} \text{d}\tau \Rightarrow 1 = S_0 \int_0^\infty A(\tau) e^{-r\tau} \text{d}\tau \tag{3.41} \end{equation}\]

At this point it might be enlightening to return once again to the demographic simile. In equation (3.30) we saw that the total number of offspring of a person could be obtained integrating \(n(a)\) (rate of reproduction at age \(a\)) over the whole lifespan of the individual. Thus, we can define the distribution of the age a person has when she has a child as

\[\begin{equation} g'(a) = \frac{n(a)}{\int_0^\infty n(a) \text{d} a } = \frac{n(a)}{R_0^{demo}}\,. \tag{3.42} \end{equation}\]

If we take the “age” of an infection to be the time since the infection, we can define an analogous quantity in epidemiology,

\[\begin{equation} g(\tau) = \frac{S_0 A(\tau)}{R_0}\,, \tag{3.43} \end{equation}\]

called the generation interval distribution. In this case this distribution is the probability distribution function for the time from infection of an individual to the infection of a secondary case by that individual. Going back to (3.41) we now have

\[\begin{equation} \frac{1}{R_0} = \int_0^\infty g(\tau) e^{-r\tau} \text{d}\tau\,. \tag{3.44} \end{equation}\]

According to this last expression, the shape of the generation interval distribution determines the relation between the basic reproduction number \(R_0\) and the growth rate \(r\). In all the models explored so far, we assumed that both the rate of infection \(\beta\) and the rate of leaving the infectious stage \(\mu\) were constant. Hence, it follows that the duration of a generation interval is specified as an exponential distribution with mean \(Tg = 1/\mu\). Under these assumptions the basic reproduction number is then

\[\begin{equation} \begin{split} R_0 & = \left( \int_0^\infty \mu e^{-\mu\tau} e^{-r\tau} \text{d} \tau\right)^{-1} = \left( \frac{\mu}{r+\mu} \right)^{-1} \\ & = 1 + rTg \,. \end{split} \tag{3.45} \end{equation}\]

This relation between the growth rate and the generation time was already proposed by Dietz in 1976 [195], although only in the specific case of the SIR model. Equation (3.44), however, allows for the calculation of \(R_0\) in more complex scenarios, such as non-constant \(\mu\) [194]. Despite its limitations, this expression is widely used in the literature due to its simplicity. Indeed, \(Tg\) is often considered to be simply the inverse of the recovery rate, which is relatively easy to measure. The growth rate \(r\), in turn, can be obtained by fitting a straight line to the logarithm of the cumulative number of infections as a function of time, see (3.40).
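The estimation procedure can be sketched as follows: fit a straight line to the logarithm of the case counts during the early phase to obtain \(r\), and then apply equation (3.45). This is a minimal illustration under stated assumptions: the case series is synthetic and noise-free, it uses daily new cases (which share the growth rate of the cumulative count during exponential growth), and the function name `estimate_r0` is introduced here.

```python
import numpy as np

def estimate_r0(new_cases, generation_time):
    """Growth rate r from a log-linear fit and R0 = 1 + r * Tg, eq. (3.45).

    Assumes all counts are strictly positive and belong to the
    exponential phase of the outbreak.
    """
    cases = np.asarray(new_cases, dtype=float)
    days = np.arange(len(cases))
    slope, _intercept = np.polyfit(days, np.log(cases), 1)
    return slope, 1.0 + slope * generation_time

# Synthetic early-phase data: exponential growth with a hypothetical rate
true_r = 0.25                        # per day
days = np.arange(14)
new_cases = 5.0 * np.exp(true_r * days)

Tg = 3.0                             # generation time, taken as 1 / mu
r_hat, R0_hat = estimate_r0(new_cases, Tg)
print(r_hat, R0_hat)
```

With real, noisy data the result depends on which days are included in the fit, which is one of the difficulties of this approach.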

There are, however, several problems with this procedure. First, we stated that the exponential growth is valid during the early phase of an outbreak, but there is no general way to know how long that phase lasts. As a consequence, when one fits a straight line to the data, some heuristics have to be used to determine which points to use. Even more, if the dynamics is really fast there might be just a few valid points. Given the stochasticity of the process, this might lead to poor estimates of the growth rate.

Besides, there are some caveats on the exponential growth assumption. For instance, it has been observed that for some diseases such as HIV/AIDS the early growth is sub-exponential [196]. Likelihood based methods in which the early exponential growth is not needed were thus proposed [197], [198]. But even for diseases in which it might be a good approximation, there is the problem of susceptible depletion. Indeed, if the population is infinite, each infected individual will always be able to reach an infinite number of susceptibles. But this is not true in real situations, which prevents the exponential growth from being sustained for too long. Hence, methods that account for this depletion during the initial phase also had to be developed [199], [200].

It should be clear by now that despite the widespread use of this parameter, it is far from being perfectly understood, especially in the presence of real world data. Yet, we can go one step further and generalize the definition of \(R_0\) to the effective reproduction number, \(R(t)\).

3.2.2 The effective reproduction number

The effective reproduction number, \(R(t)\), is defined as the average number of secondary cases generated by an infectious individual at time \(t\). Hence, we are relaxing the hypothesis of a fully susceptible population that we gave at the beginning of section 3.2.

This parameter is obviously better suited for studying the impact of protection measures taken after the detection of an epidemic, as it can be defined at any time. If \(R(t)<1\), it seems reasonable to say that the epidemic is in decline and may be regarded as being under control at time \(t\). Furthermore, in section 3.1.1 we saw that diseases such as measles have periodic outbreaks and also confer immunity to those who have suffered them. Thus, when a new outbreak starts the population is not completely susceptible, invalidating one of the conditions in the definition of \(R_0\) [201].

To provide a mathematical definition of \(R(t)\) [202], we can revisit equation (3.32) and define

\[\begin{equation} R(t) = S(t) \int_0^\infty A(\tau)\text{d}\tau\,, \tag{3.46} \end{equation}\]

which leads to

\[\begin{equation} R(t) = \frac{S(t)}{S_0} R_0\,. \tag{3.47} \end{equation}\]

According to this expression, in a closed population, the value of \(R(t)\) should monotonically decrease. As expected, this has been observed in computational simulations [201], even in the case of sub-exponential growth [203]. However, this is not always the case if one tries to obtain \(R(t)\) from real data [204]. In particular, Wallinga and Teunis studied the severe acute respiratory syndrome (SARS) epidemic of 2003 and observed several local maxima in the evolution of the effective reproduction number, which they attributed to “super-spread events” in which certain individuals infected unusually large numbers of secondary cases [205]. This was also found in other diseases, signaling that the whole complexity of real systems cannot be completely captured with simple homogeneous models [206]. For this reason, the next section will be devoted to our contribution to the study of the effect that more heterogeneous population distributions have on the reproduction numbers.

3.2.3 Measurability of the epidemic reproduction number

Of course, there had been plenty of diseases, long before humans had been around. But humans had definitely created Pestilence. They had a genius for crowding together, for poking around in jungles, for setting the midden so handily next to the well. Pestilence was, therefore, part human, with all that this entailed.

“Thief of time”, Terry Pratchett

The fundamental role that households play in the spreading of epidemics has been acknowledged for a long time. Early estimations of influenza spreading already showed that the probabilities of getting infected from someone living in your household and from someone in the community were quite different. Even more, it was shown that children were twice as likely as adults to get the infection from the community, signaling that the places that children visit and the heterogeneity of household members are fundamental in the disease dynamics [207]. In a more recent study, data from a real epidemic in a small semi-rural community was analyzed, with schools added explicitly into the picture. As expected, it was observed that their role is key in the spreading of the disease. But, even more interesting, the authors calculated a reproduction number for each population structure and found it to be smaller than or of the order of 1, meaning that for an outbreak to be sustained a complex interplay between those structures must take place [208].

In order to introduce the concept of households into the models analyzed so far, we need to revisit once again the assumption of full homogeneity. Most theoretical approaches in this line, since the seminal work of Ball et al. in 1997 [209], have focused on what is known as models with two levels of mixing. In these models, a local homogeneous mixing in small environments (such as households) is set over a background homogeneous mixing of the whole population. This can be further extended by adding other types of local interactions, such as schools or workplaces. An individual can thus belong at the same time to two or more local groups. For this reason, they are also known as overlapping group models [210]. This allows for the definition of several basic reproduction numbers, one for the community and the rest for local interactions, which in turn can be used to devise efficient vaccination strategies [211], [212]. Other studies have also proposed that the generation time can differ between households and the community [213].

However, theoretical studies have been mostly focused on the early phase of the epidemics because it is more mathematically tractable. Yet, we have seen in the previous section that \(R(t)\) can provide very important insights to understand the dynamics of real diseases. For this reason, statistical methods have been developed to analyze \(R(t)\) [214], [215]. Unfortunately, for these methods, disentangling the role that each structure of the system plays is challenging due to the lack of microscale data on human contact patterns for large populations. Note also that due to the typically small size of households, stochastic effects are highly important.

In this work our objective is to shed some light on the mechanisms behind disease dynamics in heterogeneous populations. To do so, we study the evolution of \(R(t)\) and \(Tg\) with data-driven stochastic micro-simulations of an influenza-like outbreak on a highly detailed synthetic population. The term “micro” refers to the fact that we will keep track of each individual in the population, allowing us to reconstruct the entire transmission chain. The great advantage of this method is that it allows for the computation of \(R(t)\) from its own epidemiological definition, without requiring any mathematical approximation.

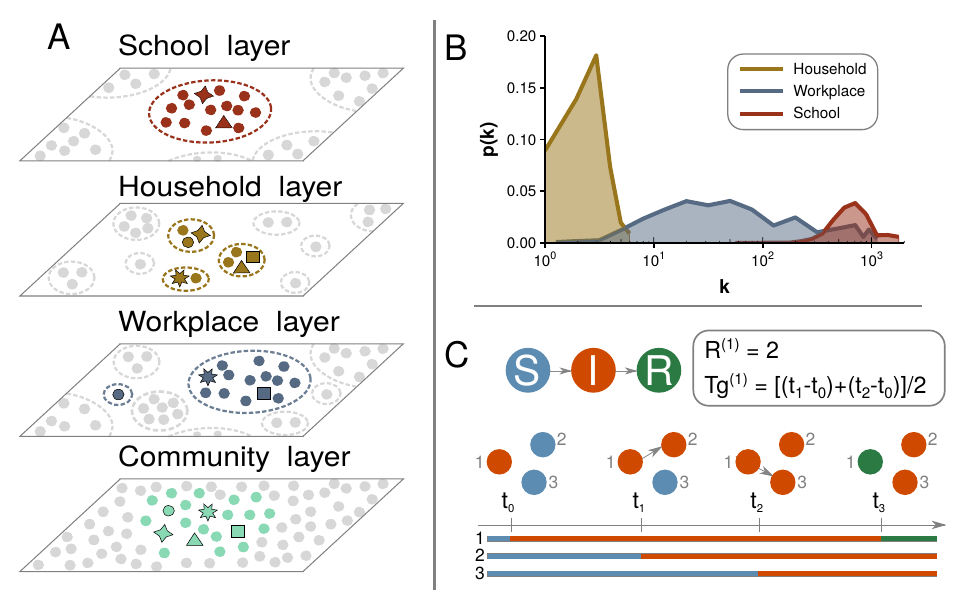

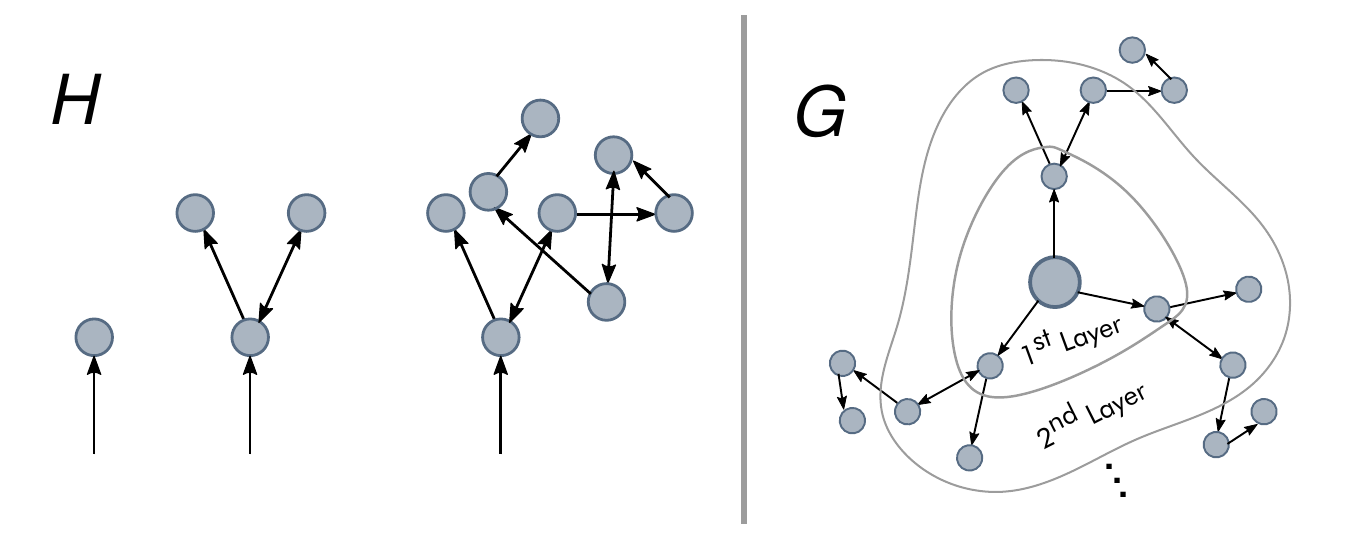

Our synthetic population is composed of 500,000 agents, representing a subset of the Italian population. This population model, developed by Fumanelli et al. [216], divides the system into the four settings where influenza transmission occurs, namely households, schools, workplaces and the general community [217]. Henceforth we will refer to these settings as layers for the similarity of this construction to the multilayer networks we saw in section 2.1.3; a visualization of the model is shown in figure 3.6A. The household layer is composed of \(n_H\) disconnected components, each one representing one household. Both the number of individuals inside each household and their ages are determined by sampling from the actual Italian distributions. Then, by sampling from the multinomial distribution of schooling and employment rates by age, each individual might also be assigned to a school or workplace. The number and size of workplaces and schools are also sampled from the actual Italian distributions. As in the household layer, each of the \(n_S\) schools and \(n_W\) workplaces is disconnected from the rest in its respective layer. Lastly, all individuals are allowed to interact with each other in the community layer, encapsulating the background global interaction. To highlight the heterogeneity of the system, in figure 3.6B the size distribution of the places each individual belongs to is shown. Note that while most households contain 2-3 individuals and most schools are close to 1,000 students, workplaces cover a much wider range of sizes.

Figure 3.6: Model structure of a synthetic population organized in schools, households and workplaces. A) Visualization of the overlapping system, with individuals being able to interact locally in multiple contexts. B) Distribution of the structure size each individual belongs to. C) Illustration of the transmission process with an example of how to calculate the reproduction number and generation interval.

The influenza-like transmission dynamics are defined through the susceptible, infected, removed (SIR) compartmental model that we have been analyzing under diverse assumptions. We simulate the transmission dynamics using a stochastic process for each individual, keeping track of where she contracted the disease, whom she is in contact with and so on. In order to resemble an influenza-like disease, the local spreading power in each layer is calibrated in such a way that the fraction of cases in the four layers is in agreement with literature values (namely, 30% of all influenza infections are linked to transmission occurring in the household setting, 18% in schools, 19% in workplaces and 33% in the community [218]). Hence, the probability that individual \(j\) infects \(i\) in layer \(l\) is

\[\begin{equation} \beta = w_l\,, \tag{3.48} \end{equation}\]

as long as \(j\) is infected, \(i\) is susceptible and both belong to the same component in layer \(l\). Moreover, we set the values of \(w_l\) such that the basic reproduction number is \(R_0=1.3\) [219]. Finally, the removal probability, \(\mu\), is set so that the removal time is 3 days [220].

The epidemic starts with a fully susceptible population in which we set just one individual as infected. Thanks to the microscopic detail of the model, we can thus compute the basic reproduction number directly as the number of infections that said first individual produces before recovery. Its counterpart over time, the effective reproduction number, \(R(t)\), is measured using the average number of secondary cases generated by an infectious individual at time \(t\). Similarly, we also define the effective reproduction number in layer \(l\), \(R_l(t)\), as the average number of secondary infections generated by a typical infectious individual in layer \(l\):

\[\begin{equation} R_l(t) = \frac{\sum_{i\in \mathcal{I}(t)} D_l(i)}{|\mathcal{I}(t)|}\,, \tag{3.49} \end{equation}\]

where \(\mathcal{I}(t)\) represents the set of infectious individuals that acquired the infection at time \(t\) and \(D_l(i)\) the number of infections generated by infectious node \(i\) in layer \(l\) with \(l \in L=\{H,S,W,C\}\). With this expression we can obtain the overall reproductive number as

\[\begin{equation} R(t) = \sum_{l\in L} R_l(t)\,. \tag{3.50} \end{equation}\]
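As a rough sketch of how equations (3.49) and (3.50) could be evaluated from the output of a simulation, assume that the simulation stores each individual's infection time and a list of transmission events labeled by layer. The function name and the data layout below are hypothetical and serve only to illustrate the bookkeeping.

```python
from collections import defaultdict

LAYERS = ("H", "S", "W", "C")


def reproduction_numbers(infection_time, events, t):
    """Return ({layer: R_l(t)}, R(t)) for the individuals infected at time t.

    infection_time: dict node -> time at which the node acquired the infection
    events:         list of (infector, infectee, layer) tuples
    Returns None if nobody was infected at time t.
    """
    cohort = {n for n, tau in infection_time.items() if tau == t}
    if not cohort:
        return None
    # D_l(i) summed over the cohort, one counter per layer
    counts = defaultdict(int)
    for infector, _infectee, layer in events:
        if infector in cohort:
            counts[layer] += 1
    r_layer = {l: counts[l] / len(cohort) for l in LAYERS}  # eq. (3.49)
    return r_layer, sum(r_layer.values())                   # eq. (3.50)


# Toy transmission tree (made-up data)
infection_time = {1: 0, 2: 1, 3: 1, 4: 2, 5: 2, 6: 2}
events = [(1, 2, "H"), (1, 3, "W"), (2, 4, "H"), (3, 5, "W"), (3, 6, "C")]

r_layer_t1, r_t1 = reproduction_numbers(infection_time, events, 1)
```

For the cohort infected at \(t=1\) (individuals 2 and 3), three secondary infections are generated in total, one in each of the household, workplace and community layers, so each of those layers contributes 0.5 and the overall value is 1.5.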

The generation time \(Tg\) is defined as the average time interval between the infection time of infectors and their infectees. Hence, analogously to the reproduction number, we define the generation time in layer \(l\) as

\[\begin{equation} {Tg}_l(t) = \frac{\sum_{i\in \mathcal{I}(t)} \sum_{j \in \mathcal{I}'_l(i)} (\tau(j)-t)}{\sum_{i \in \mathcal{I}(t)} D_l(i)}\,, \tag{3.51} \end{equation}\]

where \(\mathcal{I}'_l(i)\) denotes the set of individuals that \(i\) infected in layer \(l\) and \(\tau(j)\) is the time when node \(j\) acquired the infection. Therefore, the overall generation time \(Tg(t)\) reads

\[\begin{equation} Tg(t) = \frac{\sum_{l\in L}\sum_{i \in \mathcal{I}(t)} \sum_{j \in \mathcal{I}'_l(i)} (\tau(j)-t)}{\sum_{l\in L}\sum_{i\in \mathcal{I}(t)} D_l(i)}\,. \tag{3.52} \end{equation}\]
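Equations (3.51) and (3.52) can be evaluated with the same kind of bookkeeping. The sketch below assumes, hypothetically, that the simulation provides a dictionary of infection times and a list of `(infector, infectee, layer)` events; none of these names come from the original implementation.

```python
LAYERS = ("H", "S", "W", "C")


def generation_times(infection_time, events, t):
    """Return ({layer: Tg_l(t)}, Tg(t)) for the individuals infected at time t.

    A value of None means that no infection was generated (in that layer,
    or overall), so the corresponding generation time is undefined.
    """
    cohort = {n for n, tau in infection_time.items() if tau == t}
    lag_sum = {l: 0.0 for l in LAYERS}  # sum of tau(j) - t per layer
    n_inf = {l: 0 for l in LAYERS}      # sum of D_l(i) per layer
    for infector, infectee, layer in events:
        if infector in cohort:
            lag_sum[layer] += infection_time[infectee] - t
            n_inf[layer] += 1
    tg_layer = {
        l: (lag_sum[l] / n_inf[l] if n_inf[l] else None) for l in LAYERS
    }                                                     # eq. (3.51)
    total = sum(n_inf.values())
    tg = sum(lag_sum.values()) / total if total else None  # eq. (3.52)
    return tg_layer, tg


# Toy example: individual 1 is infected at t=0 and infects
# individual 2 at t=1 (household) and individual 3 at t=2 (workplace).
infection_time = {1: 0, 2: 1, 3: 2}
events = [(1, 2, "H"), (1, 3, "W")]

tg_layer, tg = generation_times(infection_time, events, 0)
```

Note that, unlike \(R(t)\), the overall generation time is not the sum of the layer values: according to equation (3.52) it is their average weighted by the number of infections generated in each layer.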

A schematic illustration of the transmission dynamics is shown in figure 3.6C. In that case, individual 1 gets infected at \(t=t_0\), while individuals 2 and 3 are still susceptible. During the course of her disease individual 1 infects individual 2 at \(t=t_1\) and individual 3 at \(t=t_2\) before finally recovering at \(t=t_3\). Thus, her reproduction number is equal to 2 and her generation time is \(1.5\), supposing that \(t_{i+1} - t_i = 1\). In fact, in our simulations we will set \(\Delta t = 1\) day. Due to the stochasticity of the process, each realization might result in an outbreak of different length. Hence, the time evolution of each simulation is aligned so that the peak of the epidemic is exactly at \(t=0\). The results for the reproduction number and generation time are shown in figure 3.7.
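The alignment of the realizations can be sketched as a simple shift of the time axis. The snippet assumes that the peak is identified as the first maximum of the daily incidence. This choice, like the function name, is an assumption of the example, since the text does not specify which time series defines the peak.

```python
def align_at_peak(incidence):
    """Shift the time axis so that the (first) maximum of `incidence` is at t=0.

    incidence: list with the number of new infections per time step
    Returns a list of (t, value) pairs with t measured from the peak.
    """
    # max() returns the first index attaining the maximum value
    peak = max(range(len(incidence)), key=incidence.__getitem__)
    return [(step - peak, value) for step, value in enumerate(incidence)]


# Toy realization (made-up numbers)
aligned = align_at_peak([1, 3, 7, 4, 1])
```

Once every realization has been shifted in this way, quantities such as \(R(t)\) can be averaged across realizations at equal values of the shifted time.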

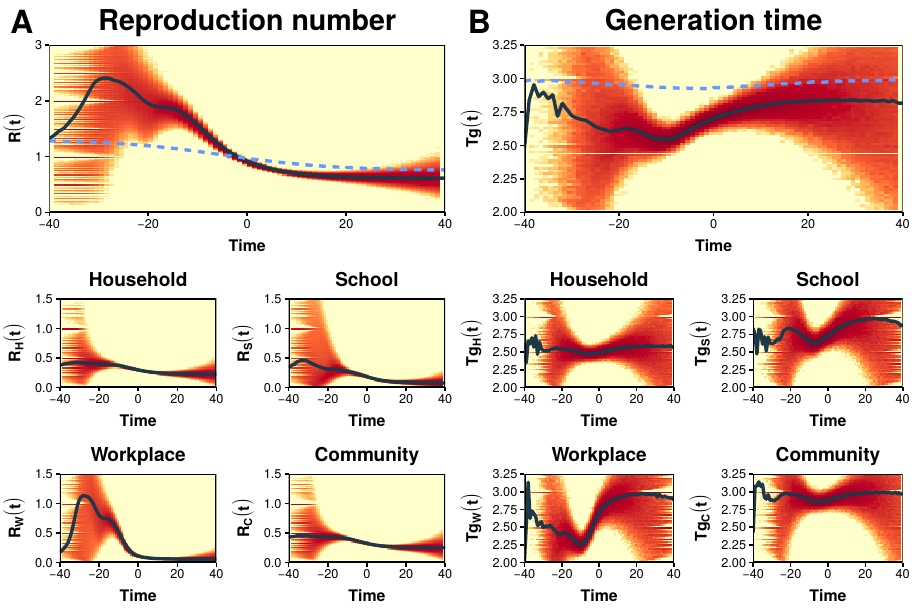

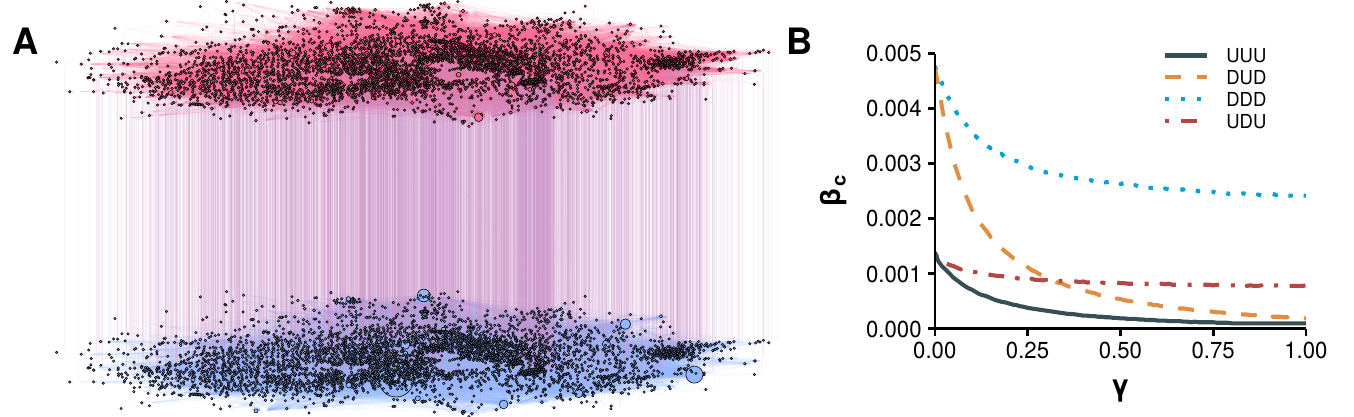

Figure 3.7: Fundamental epidemiological indicators. A) Top: mean \(R(t)\) of data-driven model (solid line) compared to the solution under a completely homogeneous population (dashed line). The colored area shows the density distribution of \(R(t)\) values obtained in single realizations of the model. Bottom: the reproductive number is broken down in the four layers. B) As A but for the generation time. In all cases the simulations have been aligned at the peak of the epidemic.

We find that \(R(t)\) increases over time in the early phase of the epidemic, rising from \(R_0 = 1.3\) to a peak of about \(2.5\) (figure 3.7A). In contrast, in the homogeneous model (dashed line), which lacks the typical structures of human populations, \(R(t)\) is nearly constant in the early epidemic phase and then rapidly declines before the epidemic peak (\(t=0\)), as predicted by the classical theory. The non-constant phase of \(R(t)\) implies that \(R_0\) loses its meaning as a fundamental indicator in favor of \(R(t)\). In figure 3.7B we show an analogous analysis of the measured generation time in the data-driven model. In this case, we find that \(Tg\) is considerably shorter than the infectious period (\(3\) days), with a more marked shortening near the epidemic peak. Once again, in the homogeneous model (dashed line) the behavior predicted by the classical theory is recovered.

A closer look at the transmission process in each layer helps to understand the origin of the deviations from classical theory. Specifically, we see that \(R(t)\) tends to peak in the workplace layer, and to some extent also in the school layer. In the community layer, on the other hand, the behavior is much closer to what is expected in a classical homogeneous population. We also find that \(Tg\) is remarkably shorter in the household layer than in all other layers. This could simply be due to a depletion of susceptibles. To illustrate this, suppose that an infected individual in a household of size 3 has infected one of the other two. Then, during the next time step both will compete to infect the last susceptible, something that does not happen in large populations. This would lead to a shorter generation time simply because an infected individual runs out of household members to infect, even if she is still infectious. This evidence calls for considering within-household competition effects when analyzing empirical data of the generation time.

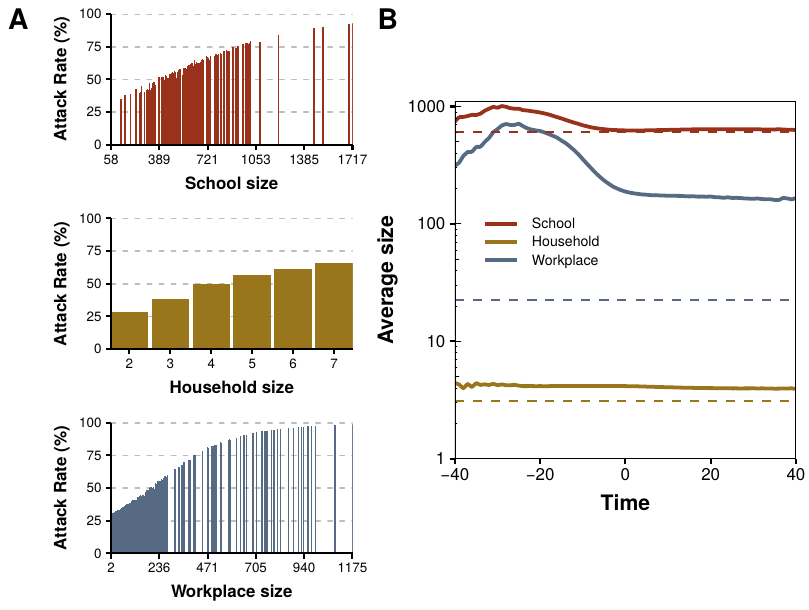

Figure 3.8: Attack rate as a function of site size. A) Fraction of individuals belonging to each place that contracted the disease, not necessarily in said setting. B) Solid line: average size of places in which there is at least one new infection in each time step, broken down in three layers. Dashed line: expected size if there is at least one infection in every place.

To further understand the reasons for the diverse trends observed in each layer, in figure 3.8 we analyze the effect that the size of the components has on the dynamics. In figure 3.8A we study the attack rate (final fraction of removed individuals, i.e., individuals that suffered the infection at some point) as a function of the site size, distinguishing the three layers. The results indicate that the spreading is much more important in large buildings, but we know that they are scarce (see figure 3.6B). Hence, it seems that the initial growth of the epidemic might stop once the big components have been mostly infected. This is corroborated in figure 3.8B, where the average size of buildings with at least one infection is shown. The situation is thus clear. In the classical model, not only is it assumed that the whole population is initially susceptible, but also that it is in contact with the first infected individual from the beginning. In heterogeneous populations, however, the first infected individual has only a handful of local contacts, diminishing her infectious power. Then, as the epidemic progresses, more and more susceptibles enter into play, increasing the number of individuals that can be infected. Yet, sooner or later the components will run out of susceptibles, even if there is still a large fraction available in the rest of the system. This, in turn, leads to a more abrupt descent than what is expected in the classical approximation.
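The attack rate as a function of site size, as described for figure 3.8A, could be computed along the following lines. This is a minimal sketch with a hypothetical data layout (a dictionary of sites with their members and a dictionary of final states). It pools all sites of the same size within one layer and counts members as infected regardless of the setting in which they contracted the disease.

```python
from collections import defaultdict


def attack_rate_by_size(sites, final_state):
    """Fraction of removed individuals among members of sites of each size.

    sites:       dict site id -> list of member ids (one layer at a time)
    final_state: dict member id -> "S" or "R" at the end of the epidemic
    """
    removed = defaultdict(int)
    total = defaultdict(int)
    for members in sites.values():
        size = len(members)
        total[size] += size
        removed[size] += sum(1 for m in members if final_state[m] == "R")
    return {size: removed[size] / total[size] for size in sorted(total)}


# Toy layer with two sites of size 2 and one of size 4 (made-up data)
sites = {"a": [1, 2], "b": [3, 4], "c": [5, 6, 7, 8]}
final_state = {1: "R", 2: "S", 3: "S", 4: "S",
               5: "R", 6: "R", 7: "R", 8: "S"}

rates = attack_rate_by_size(sites, final_state)
```

In this toy layer one out of four members of the small sites ends up removed, against three out of four in the large site.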

These results clearly highlight how the heterogeneity of human interactions (i.e., clustering in households, schools and workplaces) alters the standard results of fundamental epidemiological indicators, such as the reproduction number and the generation time. Furthermore, they call into question the measurability of \(R_0\) in realistic populations, as well as its adequacy as an approximate descriptor of the epidemic dynamics. Lastly, our study suggests that epidemic inflection points, often ascribed to behavioral changes or control strategies, could also be explained by the natural contact structure of the population. Hopefully, this analysis will open the path to developing better theoretical frameworks, in which some of the most fundamental assumptions of epidemiology have to be revisited.

3.3 The epidemic threshold fades out

In epidemiology attention has historically been restricted to biological factors. We began this chapter stating that individuals were just indistinguishable particles interacting according to the mass action law. However, throughout the following sections, we have shown that when this oversimplification is relaxed many interesting phenomena arise. In this section we shall go one step further and completely remove what we called assumptions 3 and 4: mass action and indistinguishability. To distinguish individuals, we will assign to each of them an index, \(i\). Then, we will allow individuals to spread the disease only to those with whom they have some kind of contact (e.g. they are friends), which we will encode in links. In other words, we are finally going to introduce networks into the picture.

It is rather difficult to establish the origin of what we may call disease spreading on networks or network epidemiology. Probably, one of the earliest attempts is the work by Cochran, in 1936 [221], in which he studied the propagation of a disease in a small plantation of tomatoes. Although his work might be better described as statistical analysis, the reason to consider it one of the precursors of the spreading on networks is that, as Kermack and McKendrick had done roughly 10 years before, his assumptions were all mechanistic rather than based on the knowledge of the particular problem. This is clearly seen in how he introduced the model: “We suppose that in the first [day] each plant in the field has an equal and independent chance \(p\) of becoming infected, and that in the second [day] a plant which is next to a diseased plant has a probability \(s\) of being infected by it, healthy plants which are not next to a diseased plant remaining healthy”. In modern terminology, the plants were arranged in a lattice structure and could infect their first neighbors with probability \(s\). The assumptions are particularly strong because he knew that the disease was propagated by an insect, but decided to create a very general model.

During the next couple of decades, lattice systems were quite popular in physics, geography and ecology. Then, in the 1960s the interest in studying the spatial spread of epidemics started to grow (see the introduction of [222] for a nice overview) with three main approaches. In the first, the agents that could be infected were set in the center of a certain tessellation of space and could only infect/be infected by their neighbors. This is the closest approach to the modern study of epidemics on networks, but it was not as popular, as it was mainly used to study plant systems [223]. The most popular approach was to distribute individuals continuously in space with some chosen density. This led to the study of diffusion processes, focusing on the interplay between velocity and density [224]. The third approach was based on what we briefly defined in section 3.1.1 as metapopulations. Recall that in a metapopulation individuals are arranged in a set of sub-populations. Within each sub-population homogeneous mixing is usually applied, and individuals are also allowed to migrate from one sub-population to another. Hence, the idea was to simulate the fact that people (or animals) live in a certain place where they can contract the disease, and then travel to a different area and spread it to its inhabitants [168]. Note, however, that none of these methods takes into account any sociological factors of the population.

In the beginning of the 1980s some results pointing in the direction of introducing more complex networks started to appear. In particular, von Bahr and Martin-Löf in 1980 [225] and Grassberger in 1983 [226] (in the context of percolation) showed that the classical SIR model on a homogeneous population could be related to the ER graph model that we saw in section 2.1.2. Indeed, suppose that we have a set of individuals under the homogeneous mixing approach and simulate an epidemic. Next, if an individual \(i\) infects another individual \(j\), we establish a link between them. If the probability of this event is really low, the epidemic will not take place. Conversely, for large probabilities most nodes will be randomly connected. This is precisely how we defined the ER model, with the only difference being that the probability \(p\) of establishing a link will be a function of both the probability of infecting someone, \(\beta\), and that of recovering, \(\mu\). Note, however, that they did not implement a disease dynamics on a network, but rather extracted the network from the results of the dynamics.
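As an illustration of how such a dependence on \(\beta\) and \(\mu\) can arise, consider a discrete-time version of the process in which, at each step, an infected individual first attempts to infect a given susceptible with probability \(\beta\) and then recovers with probability \(\mu\). This update order is an assumption made for the sake of the sketch and is not necessarily the convention followed in [225] or [226]. The transmission takes place at step \(k\) if neither transmission nor recovery occurred during the previous \(k-1\) steps, so that the probability of eventually establishing the link is

\[\begin{equation} p = \sum_{k=1}^{\infty} \beta \left[(1-\beta)(1-\mu)\right]^{k-1} = \frac{\beta}{1-(1-\beta)(1-\mu)}\,. \end{equation}\]

Under this assumption \(p \to 1\) when \(\mu \to 0\), since the individual remains infectious indefinitely, and \(p \to \beta\) when \(\mu \to 1\), since she only has one attempt.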

Roughly at the same time, gonorrhea rates rose precipitously. To understand the dynamics of this venereal disease, it was recognized that some sort of nonrandom mixing of the population had to be incorporated into the models. The first attempts were based on separating the contact process and the spreading process [227]. Indeed, going back to the definition of the SIR model, we defined the rate of infectivity (3.5) as \(\phi(\tau) = \beta/N\) (under the frequency approach). We can simply define \(\beta = c\,\beta'\), where \(c\) is the contact rate between individuals and \(\beta'\) the probability of spreading the disease given that a contact has taken place. For simplicity, we can drop the prime and simply write \(\phi(\tau) = c \beta/N\). With this definition the epidemic threshold would read

\[\begin{equation} \frac{c \beta}{\mu} > 1 \Rightarrow \frac{\beta}{\mu} > \frac{1}{c}\,. \tag{3.53} \end{equation}\]

This expression gives us a very powerful insight into how to combat diseases. Supposing that \(\beta\) and \(\mu\) are fixed, as they mostly depend on the characteristics of the pathogen, the best way to prevent an epidemic is to reduce the number of contacts as much as possible. A similar result was obtained in the context of vector-borne diseases, in which the number of vectors (e.g. mosquitoes) plays the role of the number of contacts [228].
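The threshold condition (3.53) can be turned into a small numerical check. The function names and parameter values below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def critical_contact_rate(beta, mu):
    """Contact rate above which c * beta / mu > 1, following eq. (3.53)."""
    return mu / beta


def is_above_threshold(c, beta, mu):
    """True if the epidemic threshold condition c * beta / mu > 1 holds."""
    return c * beta / mu > 1


# Illustrative values (not taken from any real disease)
beta, mu = 0.125, 0.5
c_crit = critical_contact_rate(beta, mu)
few_contacts = is_above_threshold(3, beta, mu)
many_contacts = is_above_threshold(5, beta, mu)
```

With these values an outbreak requires more than 4 contacts: with 3 contacts the ratio \(c\beta/\mu\) is 0.75 and the disease dies out, whereas with 5 contacts it is 1.25 and an epidemic is possible.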

The next step was the introduction of mixing matrices, the same approach that we followed to incorporate age into the SIR model in section 3.1.1. Recall that the idea was to divide the population into smaller groups according to some characteristics (e.g. gender or age) and establish some rules governing the interaction between those groups encoded in a contact matrix (hence the name of mixing matrices). Typically, both the group definitions and the mixing function were very simple. In the context of venereal diseases, the most common characteristic used to form groups was activity level. This approach was popularized by Hethcote and Yorke in 1984 [229] in their modeling of gonorrhea dynamics using the core group: a group of highly sexually active individuals who are efficient transmitters, interacting with a much larger noncore group. They showed that with less than 2% of the population in the core group, this model led to 60% of the infections being caused directly by core members. Yet, the world of epidemiology was about to be shaken by a new virus that would defy all these assumptions, HIV.

3.3.1 The decade of viruses

The emergence of HIV in the early 1980s forced scientists to pay even more attention to the role of selective mixing. In 1986, in one of the earliest attempts to model this disease [230], Anderson and May summarized the challenges that sexually transmitted diseases (STDs) presented in contrast to other more common infectious diseases such as measles:

For STDs only sexually active individuals need to be considered as candidates of the transmission process, in contrast to simple “mass action” transmission models.